Container Configuration Manual

The Logagent container can be configured to include or exclude log collection from any container in your cluster. You can also configure log routing and advanced log parsing to fit your needs.

Blacklisting and Whitelisting Logs

Not all logs are of interest, so sooner or later you will need to blacklist some log types. This is one of the reasons why Logagent automatically adds the following tags to all logs:

- Container ID

- Container Name

- Image Name

- Docker Compose Project Name

- Docker Compose Service Name

- Docker Compose Container Number

Using this log metadata, you can whitelist or blacklist log output by image or container name. The relevant environment variables are:

- MATCH_BY_NAME — a regular expression to whitelist container names

- MATCH_BY_IMAGE — a regular expression to whitelist image names

- SKIP_BY_NAME — a regular expression to blacklist container names

- SKIP_BY_IMAGE — a regular expression to blacklist image names
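The precedence between these four variables is not spelled out above. The following JavaScript sketch therefore assumes one plausible reading: every whitelist that is set must match, and no blacklist that is set may match. The function name shouldCollect and the shape of the container object are hypothetical and only illustrate the idea. This is not Logagent's implementation.

```javascript
// Hypothetical sketch of regex-based container whitelisting/blacklisting.
// Assumption: all configured whitelists must match and no blacklist may match.
// Not Logagent's source code; names and precedence are illustrative only.
function compile(pattern) {
  // An unset or empty variable means "no filter" for that rule.
  return pattern ? new RegExp(pattern) : null;
}

function shouldCollect(container, env) {
  const matchByName = compile(env.MATCH_BY_NAME);
  const matchByImage = compile(env.MATCH_BY_IMAGE);
  const skipByName = compile(env.SKIP_BY_NAME);
  const skipByImage = compile(env.SKIP_BY_IMAGE);

  // Whitelists: if set, the container must match them.
  if (matchByName && !matchByName.test(container.name)) return false;
  if (matchByImage && !matchByImage.test(container.image)) return false;

  // Blacklists: if set and matching, the container is skipped.
  if (skipByName && skipByName.test(container.name)) return false;
  if (skipByImage && skipByImage.test(container.image)) return false;

  return true;
}

// Collect only containers whose image starts with "nginx",
// but skip any container whose name contains "debug".
const env = { MATCH_BY_IMAGE: "^nginx", SKIP_BY_NAME: "debug" };
console.log(shouldCollect({ name: "web-1", image: "nginx:1.25" }, env)); // true
console.log(shouldCollect({ name: "web-debug", image: "nginx:1.25" }, env)); // false
console.log(shouldCollect({ name: "cache", image: "redis:7" }, env)); // false
```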

Some log messages are useless or noisy, like access to health check URLs in Kubernetes. You can filter such messages via regular expressions by setting the following environment variable:

IGNORE_LOGS_PATTERN=\/healthcheck|\/ping
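The following JavaScript sketch shows the effect of the example value: it drops every line that matches the pattern. It assumes that the variable is compiled as an ordinary JavaScript regular expression and tested against the raw message text. The helper makeIgnoreFilter and the sample access-log lines are hypothetical.

```javascript
// Sketch: drop log lines that match IGNORE_LOGS_PATTERN.
// Assumption: the value is compiled as a plain JavaScript RegExp and tested
// against the raw message text. Illustration only, not Logagent's code.
function makeIgnoreFilter(pattern) {
  // No "g" flag: a global RegExp keeps lastIndex state between test() calls,
  // which would make repeated tests on different lines unreliable.
  const ignore = new RegExp(pattern);
  return (lines) => lines.filter((line) => !ignore.test(line));
}

// In a JS string literal the backslashes of \/healthcheck|\/ping are doubled.
const filterLogLines = makeIgnoreFilter(
  process.env.IGNORE_LOGS_PATTERN || "\\/healthcheck|\\/ping"
);

const sample = [
  '10.0.0.5 - - "GET /healthcheck HTTP/1.1" 200',
  '10.0.0.7 - - "GET /api/orders HTTP/1.1" 201',
  '10.0.0.5 - - "GET /ping HTTP/1.1" 200',
];

// Only the /api/orders line is kept.
console.log(filterLogLines(sample));
```

Because the pattern is unanchored, it drops any line that contains /ping anywhere, such as /ping-status. Make the pattern as specific as your log format allows.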

Container Log Parsing

In Docker, logs are console output streams from containers. They might be a mix of plain text messages from start scripts and structured logs from applications. The problem is obvious: you can't just take a stream of mixed-up log events and treat it as a blob. You need to be able to tell which log event belongs to which container and which app, and you need to parse each event correctly so that it is structured. Only then can you derive more insight and operational intelligence from your logs.

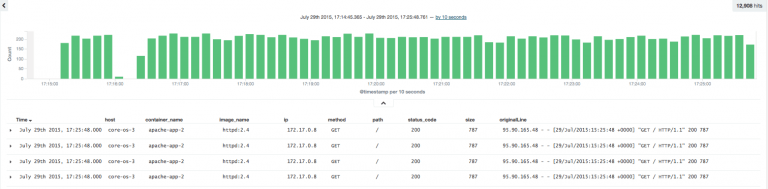

Logagent analyzes the event format, parses out data, and turns logs into structured JSON. This is important because the value of logs increases when you structure them — you can then slice and dice them and gain a lot more insight about how your containers, servers, and applications operate.
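The JavaScript sketch below gives a rough illustration of turning a mixed stream into structured JSON. Lines that parse as JSON objects keep their fields, and all other lines are wrapped as plain-text messages, each tagged with the container it came from. The sketch is deliberately simplified and is not logagent-js. Logagent's real detection covers many more formats. The field names container and message are assumptions.

```javascript
// Simplified sketch: turn a mixed container log stream into structured JSON.
// Not logagent-js: it only distinguishes JSON objects from plain text.
function structureLine(containerName, line) {
  const trimmed = line.trim();
  if (trimmed.startsWith("{")) {
    try {
      // Structured application log: keep its fields and tag the source.
      return { container: containerName, ...JSON.parse(trimmed) };
    } catch (err) {
      // Not valid JSON; fall through and treat it as plain text.
    }
  }
  // Plain text (e.g. output of a start script): wrap it in a message field.
  return { container: containerName, message: trimmed };
}

const stream = [
  ["api-1", "Starting server on port 8080"],
  ["api-1", '{"level":"info","msg":"request done","status":200}'],
  ["db-1", "{not really json"],
];

for (const [name, line] of stream) {
  console.log(JSON.stringify(structureLine(name, line)));
}
```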

Traditionally, it was necessary to use log shippers like Logstash, Fluentd or Rsyslog to parse log messages. The problem is that such setups are typically deployed in a very static fashion and configured for each input source. That does not work well in the hyper-dynamic world of containers! We have seen people struggle with Syslog drivers, parser configurations, log routing, and more. With its integrated automatic format detection, Logagent eliminates this struggle. It also eliminates the wasted resources, both computing and human time, that go into dealing with such things. This integration has a low footprint and doesn't need to retransmit logs to external services. Out of the box, it detects log types for the most popular applications as well as generic JSON and line-oriented log formats.

For example, Logagent can parse logs from official images like:

- Nginx, Apache, Redis, MongoDB, MySQL

- Elasticsearch, Solr, Kafka, Zookeeper

- Hadoop, HBase, Cassandra

- Any JSON output with special support for Logstash or Bunyan format

- Plain text messages with or without timestamps in various formats

- Various Linux and Mac OSX system logs

Adding log parsing patterns

In addition, you can define your own patterns for any log format you need to be able to parse and structure. There are three options to pass individual log parser patterns:

- Configuration file in a mounted volume: -v PATH_TO_YOUR_FILE:/etc/logagent/patterns.yml (Kubernetes ConfigMap example: Template for patterns.yml as ConfigMap)

- Content of the configuration file in an environment variable: -e LOGAGENT_PATTERNS="$(cat patterns.yml)"

- Set patterns URL as environment variable: -e PATTERNS_URL=https://yourserver/patterns.yml

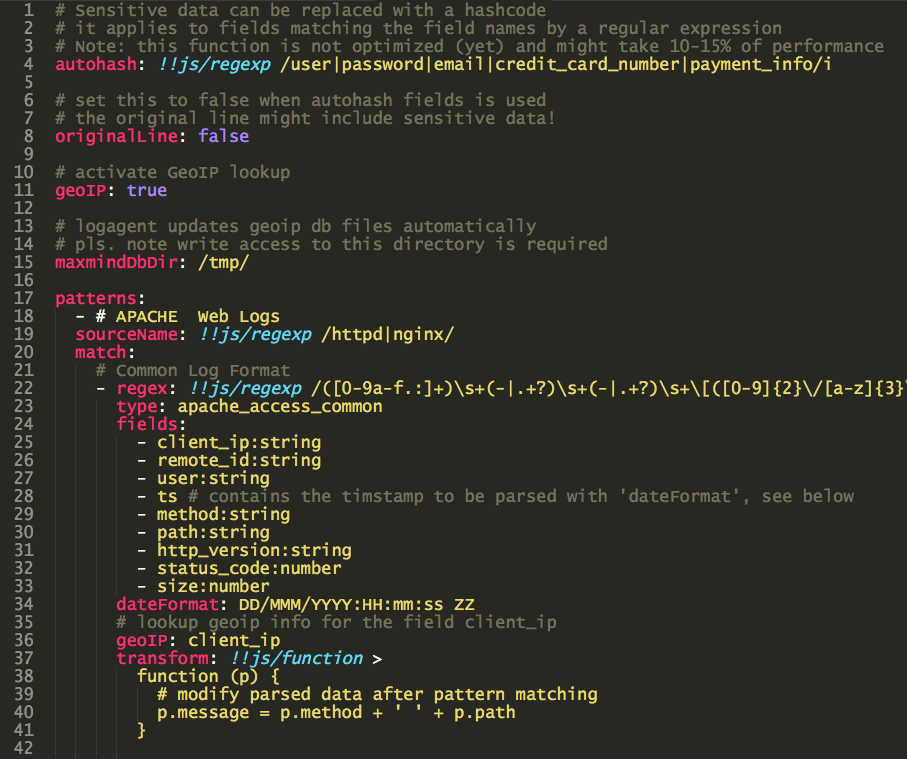

The file format for the patterns.yml file is based on JS-YAML, in short:

- "-" indicates an array element

- !!js/regexp indicates a JavaScript regular expression

- !!js/function > indicates a JavaScript function

The file has the following properties:

- patterns: list of patterns, each pattern starts with "-"

- match: a list of pattern definitions for a specific log source (image/container)

- sourceName: a regular expression matching the name of the log source. The source name is a combination of image name and container name.

- regex: JS regular expression

- fields: list of field names for the match groups extracted by the regex

- type: type used in Sematext Cloud (Elasticsearch mapping)

- dateFormat: format of the special field 'ts'; if the date format matches, a new field @timestamp is generated

- transform: A JavaScript function to manipulate the result of regex and date parsing
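The JavaScript sketch below applies one hand-written pattern definition to a log line to show how regex, fields and transform work together. The object mirrors the properties listed above. The sample regex, the field names, the source name and the applyPattern helper are hypothetical. The sketch also assumes that transform mutates the parsed object in place. Real logagent-js parsing, including dateFormat handling, is more involved, and dateFormat is left out here.

```javascript
// Hypothetical pattern object shaped like an entry in patterns.yml.
// In YAML, regex would be written with !!js/regexp and transform with !!js/function.
const pattern = {
  sourceName: /nginx/, // tested against the source name (image + container name)
  match: [
    {
      type: "access_log",
      regex: /^(\S+) - (\S+) \[([^\]]+)\] "(\S+) (\S+) [^"]*" (\d{3}) (\d+)/,
      fields: ["client_ip", "user", "ts", "method", "path", "status_code", "size"],
      // Post-processing: convert numeric strings to numbers.
      transform: function (parsed) {
        parsed.status_code = Number(parsed.status_code);
        parsed.size = Number(parsed.size);
      },
    },
  ],
};

function applyPattern(pattern, sourceName, line) {
  if (!pattern.sourceName.test(sourceName)) return null;
  for (const def of pattern.match) {
    const m = def.regex.exec(line);
    if (!m) continue;
    // Map capture group i + 1 to the field name at position i.
    const parsed = { type: def.type };
    def.fields.forEach((name, i) => {
      parsed[name] = m[i + 1];
    });
    if (def.transform) def.transform(parsed);
    return parsed;
  }
  return null; // no definition matched this line
}

const line = '10.1.2.3 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 612';
console.log(applyPattern(pattern, "nginx_web-1", line));
```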

The pattern definitions for web server logs, which are among the patterns available by default, show a few very interesting features:

- Masking sensitive data with the "autohash" property, which lists the fields to be replaced with a hash code

- Automatic Geo-IP lookups, including automatic updates of the MaxMind GeoLite database

- Post-processing of parsed logs with JavaScript functions
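The "autohash" idea can be sketched in a few lines of JavaScript with Node's built-in crypto module. Each listed field is replaced by a hash of its value, so equal values still group together in your log searches without exposing the original data. SHA-256 and the maskFields helper are assumptions for illustration. This document does not say which hash Logagent actually uses.

```javascript
const crypto = require("crypto");

// Replace the values of the listed fields with a hex-encoded SHA-256 hash.
// SHA-256 is an assumption; the point is that the same input always yields
// the same hash, so masked values can still be counted and grouped.
function maskFields(parsed, autohash) {
  for (const field of autohash) {
    if (parsed[field] !== undefined) {
      parsed[field] = crypto
        .createHash("sha256")
        .update(String(parsed[field]))
        .digest("hex");
    }
  }
  return parsed;
}

const event = { user: "alice@example.com", client_ip: "10.1.2.3", path: "/login" };
console.log(maskFields(event, ["user", "client_ip"]));
```

An unsalted hash of a low-entropy value such as an IPv4 address can be reversed by brute force. When that matters, a keyed hash (crypto.createHmac) is the safer choice.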

The component for detecting and parsing log messages, logagent-js, is open source, and contributions for even more log formats are welcome.

Log Routing

Routing logs from different containers to separate Sematext Cloud Logs Apps can be configured via Docker labels or environment variables (e.g. on Kubernetes).

Log Routing with Env Vars

Tag a container with the label or environment variable LOGS_TOKEN=YOUR_LOGS_TOKEN.

Logagent inspects the containers for this label and ships their logs to the specified Logs App.

The following container environment variables and labels are supported:

- LOGS_ENABLED=<true|false> - switch log collection for the container on or off. Note: the default value is configurable in the Logagent configuration via the setting LOGSENE_ENABLED_DEFAULT.

- LOGS_TOKEN=<YOUR_LOGS_TOKEN> - logs token for the container

- LOGS_RECEIVER_URL=<URL> - set a single log destination. The URL should not include the token or index of an Elasticsearch API endpoint, e.g. https://logsene-receiver.sematext.com. Requires you to set the LOGS_TOKEN env var.

- LOGS_RECEIVER_URLS=<URL, URL, URL> - set multiple log destinations. Each URL should include the token or index of an Elasticsearch API endpoint, e.g. https://logsene-receiver.sematext.com/<YOUR_LOGS_TOKEN>. Does not require setting the LOGS_TOKEN env var.
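The JavaScript sketch below summarizes how LOGS_TOKEN, LOGS_RECEIVER_URL and LOGS_RECEIVER_URLS relate by deriving the destination URLs from a container's labels. The sketch assumes that a single receiver URL is combined with the token as a path segment, which is the same shape as the LOGS_RECEIVER_URLS example. It also assumes that a container without any routing settings falls back to Logagent's own destination. The function resolveDestinations and the example hosts are hypothetical.

```javascript
// Hypothetical sketch: derive log destinations from container labels/env vars.
// Assumption: a single receiver URL is combined with LOGS_TOKEN as
// "<base>/<token>", the same shape as the LOGS_RECEIVER_URLS example.
// Returns null when the container has no routing of its own, meaning its
// logs go to the Logs App configured for the Logagent container itself.
function resolveDestinations(labels, defaultReceiverUrl) {
  if (labels.LOGS_RECEIVER_URLS) {
    // Multiple destinations: each URL already includes its token or index.
    return labels.LOGS_RECEIVER_URLS.split(",")
      .map((url) => url.trim())
      .filter((url) => url.length > 0);
  }
  if (!labels.LOGS_TOKEN) {
    // LOGS_RECEIVER_URL alone is not enough: it requires LOGS_TOKEN.
    return null;
  }
  // Strip trailing slashes so the token is appended as one path segment.
  const base = (labels.LOGS_RECEIVER_URL || defaultReceiverUrl).replace(/\/+$/, "");
  return [`${base}/${labels.LOGS_TOKEN}`];
}

const receiver = "https://logsene-receiver.sematext.com";
console.log(resolveDestinations({ LOGS_TOKEN: "YOUR_LOGS_TOKEN" }, receiver));
console.log(
  resolveDestinations(
    { LOGS_RECEIVER_URLS: "https://es-a.example.com/index_a, https://es-b.example.com/index_b" },
    receiver
  )
);
```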

The following Kubernetes Pod annotations are equivalent:

- sematext.com/logs-enabled=<true|false>

- sematext.com/logs-token=<YOUR_LOGS_TOKEN>

- sematext.com/logs-receiver-url=<URL>

- sematext.com/logs-receiver-urls=<URL, URL, URL>

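Example: docker run --label LOGS_TOKEN=YOUR_LOGS_TOKEN nginx starts an Nginx web server, and the logs of this container are shipped to the related Logs App.

On Kubernetes, set LOGS_TOKEN as an environment variable of the container instead, or use the equivalent Pod annotation.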

All other container logs will be shipped to the Logs App specified in the docker run command for sematext/logagent with the environment variable LOGS_TOKEN.

By default, all logs from all containers are collected and sent to Sematext Cloud. You can change this default by setting the LOGSENE_ENABLED_DEFAULT=false label for the Logagent container. Each container can then override this default through the LOGS_ENABLED label.
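The interplay between the Logagent-wide default and the per-container label comes down to a simple precedence rule, sketched below in JavaScript. The function names and the way "true"/"false" strings are parsed are assumptions for illustration.

```javascript
// Hypothetical sketch of the precedence described above:
// a container's LOGS_ENABLED label wins; otherwise the Logagent-wide
// LOGSENE_ENABLED_DEFAULT applies; if neither is set, logs are collected.
function parseBool(value) {
  if (value === undefined || value === null) return undefined;
  const v = String(value).trim().toLowerCase();
  if (v === "true") return true;
  if (v === "false") return false;
  return undefined; // unrecognized values are ignored in this sketch
}

function isLogCollectionEnabled(containerLabels, logagentSettings) {
  const perContainer = parseBool(containerLabels.LOGS_ENABLED);
  if (perContainer !== undefined) return perContainer;
  const globalDefault = parseBool(logagentSettings.LOGSENE_ENABLED_DEFAULT);
  return globalDefault !== undefined ? globalDefault : true;
}

// Opt-in mode: collection is off by default and one container opts back in.
const logagentSettings = { LOGSENE_ENABLED_DEFAULT: "false" };
console.log(isLogCollectionEnabled({}, logagentSettings)); // false
console.log(isLogCollectionEnabled({ LOGS_ENABLED: "true" }, logagentSettings)); // true
```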

Please refer to Docker Log Management & Enrichment for further details.

Log Routing with Logagent Config File

Add the LA_CONFIG_OVERRIDE=true env var to your Logagent instance, alongside LA_CONFIG=/etc/sematext/logagent.conf. Next, add your Logagent config file (./logagent.yml in this example, mounted at /etc/sematext/logagent.conf) as a config resource in the configs section of your Docker Compose/Stack file.

```yaml
version: "3.7"

services:
  st-logagent:
    image: 'sematext/logagent:latest'
    environment:
      - LA_CONFIG=/etc/sematext/logagent.conf
      - LA_CONFIG_OVERRIDE=true
    # if you're using Docker Stack use configs,
    # otherwise use volumes or secrets
    configs:
      - source: logagent_config
        target: /etc/sematext/logagent.conf
    deploy:
      mode: global
      restart_policy:
        condition: on-failure
    volumes:
      - '/var/run/docker.sock:/var/run/docker.sock'

configs:
  logagent_config:
    file: ./logagent.yml
```

The logagent.yml looks like this:

```yaml
options:
  printStats: 60
  suppress: true
  geoipEnabled: true
  diskBufferDir: /tmp/sematext-logagent

input:
  docker:
    module: docker-logs
    socket: /var/run/docker.sock
    labelFilter: com.docker.*,io.kubernetes.*,annotation.*

parser:
  patternFiles:
    - /etc/logagent/patterns.yml

outputFilter:
  dockerEnrichment:
    module: docker-enrichment
    autodetectSeverity: true
  backward_compatible_field_name:
    module: !!js/function >
      function (context, config, eventEmitter, data, callback) {
        // debug: dump data to error stream
        // console.error(data.labels)
        if (data.labels && data.labels['com_docker_swarm_service_name']) {
          data.swarm_service_name = data.labels['com_docker_swarm_service_name']
          // data.swarm_service_id = data.labels['com_docker_swarm_service_id']
          // data.swarm_task_name = data.labels['com_docker_swarm_task_name']
        }
        callback(null, data)
      }

output:
  sematext-logs1:
    module: elasticsearch
    url: https://logsene-receiver.sematext.com
    index: LOGS_TOKEN_1
  sematext-logs2:
    module: elasticsearch
    url: https://logsene-receiver.sematext.com
    index: LOGS_TOKEN_2
  elasticsearch:
    module: elasticsearch
    url: http://local-es:9200
    index: app
```

In the output section, you can configure as many outputs as you want. This example defines two Sematext Logs App outputs and one local Elasticsearch output.
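You can exercise the backward_compatible_field_name filter on its own in Node.js to check what it does before deploying it. The function below is the body from the config, copied verbatim apart from its debug comments. The sample event and its labels object are a hypothetical stand-in for the data that Logagent passes in. This filter does not use context, config or eventEmitter, so they are passed as empty values here.

```javascript
// The output filter from logagent.yml, copied as a standalone function.
function backwardCompatibleFieldName(context, config, eventEmitter, data, callback) {
  if (data.labels && data.labels["com_docker_swarm_service_name"]) {
    // Copy the Swarm service name label to a top-level field.
    data.swarm_service_name = data.labels["com_docker_swarm_service_name"];
  }
  // Hand the (possibly modified) event on to the next stage.
  callback(null, data);
}

// Hypothetical sample event; real events carry many more fields.
const sampleEvent = {
  message: "GET / 200",
  labels: { com_docker_swarm_service_name: "web" },
};

backwardCompatibleFieldName({}, {}, null, sampleEvent, (err, result) => {
  if (err) throw err;
  console.log(result.swarm_service_name); // web
});
```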