Making Node.js applications quick and sturdy is a tricky task to get right. Getting performance right on V8, the engine Node.js is built on, is not at all as simple as one would think. JavaScript is a dynamically typed language, where the engine determines the types of variables at runtime, and it manages memory for you through garbage collection. That convenience hides a lot, and if you’re not careful you can end up with memory leaks. At its core, Node.js is a JavaScript runtime with limits on memory utilization and CPU thread usage. Its garbage collection is tightly coupled with both process memory and CPU usage.

There are various metrics to explore and track, but which are important? This article will discuss the key metrics that are vital in analyzing your Node.js server’s performance.


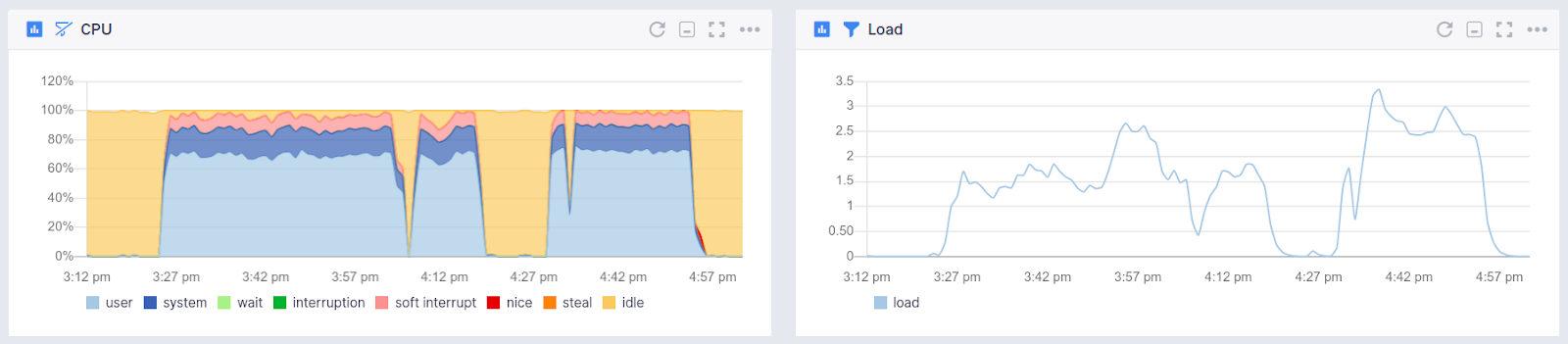

CPU Usage Metrics for Node.js

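I mentioned above that the Node.js runtime has limits regarding CPU thread utilization. The reason behind this is that the runtime executes your JavaScript on a single thread, which means it’s bound to a single core of a CPU. One instance of a Node.js application can only run its JavaScript on one CPU core at a time, even though V8 and libuv use a few background threads for tasks like garbage collection and file I/O.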

With this in mind, Node.js applications rarely consume high amounts of CPU time. Instead, they rely on non-blocking I/O: the CPU does not have to wait for I/O requests and handles their results asynchronously instead. If you are facing high CPU utilization, it may mean a lot of synchronous work is hogging the CPU and blocking the thread. This is bad! Because asynchronous callbacks run on that same thread, blocking it also delays every asynchronous operation waiting to complete.

Most of the time you don’t need to worry about CPU load. It’s rarely a deal-breaker. When you do have CPU-intensive tasks, you can move them off the main thread by creating child processes or forks. For example, say you have a web server that handles incoming requests. To avoid blocking its main thread, you can spawn a child process to handle a CPU-intensive task. Pretty cool.
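Here’s a minimal sketch of that idea, using `execFile` from Node’s `child_process` module to run a CPU-heavy loop in a separate Node.js process while the parent’s event loop stays free. The task (summing integers) and the `sumInChild` helper are hypothetical placeholders; in a real application you’d more likely `fork()` a dedicated worker module and exchange messages with it.

```typescript
import { execFile } from "node:child_process";

// Hypothetical CPU-heavy task, run as an inline script in a separate Node.js process.
// The last command-line argument passed to the script is n.
const TASK = `
  const n = Number(process.argv[process.argv.length - 1]);
  let sum = 0;
  for (let i = 0; i < n; i++) sum += i;
  process.stdout.write(String(sum));
`;

// Runs the task in a child process; the parent's event loop stays free meanwhile.
function sumInChild(n: number): Promise<number> {
  return new Promise((resolve, reject) => {
    execFile(process.execPath, ["-e", TASK, String(n)], (error, stdout) => {
      if (error) {
        reject(error);
        return;
      }
      resolve(Number(stdout));
    });
  });
}
```

While the child is busy, the parent can keep accepting requests; it only pays for process startup and for passing the result back over stdout.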

Fixing CPU-intensive code is the first step to increase the performance and stability of your Node.js server. The metrics to watch out for are:

- CPU Usage

- CPU Load
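To get a feel for where these numbers come from, here’s a sketch that samples both from inside the process. It assumes CPU usage means the process’s CPU time (user plus system) as a percentage of wall-clock time over a sampling window, read via `process.cpuUsage()`, and CPU load means the operating system’s 1-minute load average from `os.loadavg()`. Since V8 and libuv run a few background threads, the percentage can occasionally exceed 100%. The `sampleCpu` helper is hypothetical.

```typescript
import { loadavg } from "node:os";

interface CpuSample {
  cpuPercent: number; // process CPU time as a percentage of wall-clock time
  loadAvg1m: number; // 1-minute system load average (always 0 on Windows)
}

// Measures this process's CPU usage over `intervalMs` milliseconds.
function sampleCpu(intervalMs = 1000): Promise<CpuSample> {
  const startUsage = process.cpuUsage();
  const startTime = process.hrtime.bigint();
  return new Promise((resolve) => {
    setTimeout(() => {
      // Passing the previous reading returns only the CPU time used since then, in microseconds.
      const { user, system } = process.cpuUsage(startUsage);
      const elapsedUs = Number(process.hrtime.bigint() - startTime) / 1000;
      resolve({
        cpuPercent: ((user + system) / elapsedUs) * 100,
        loadAvg1m: loadavg()[0],
      });
    }, intervalMs);
  });
}
```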

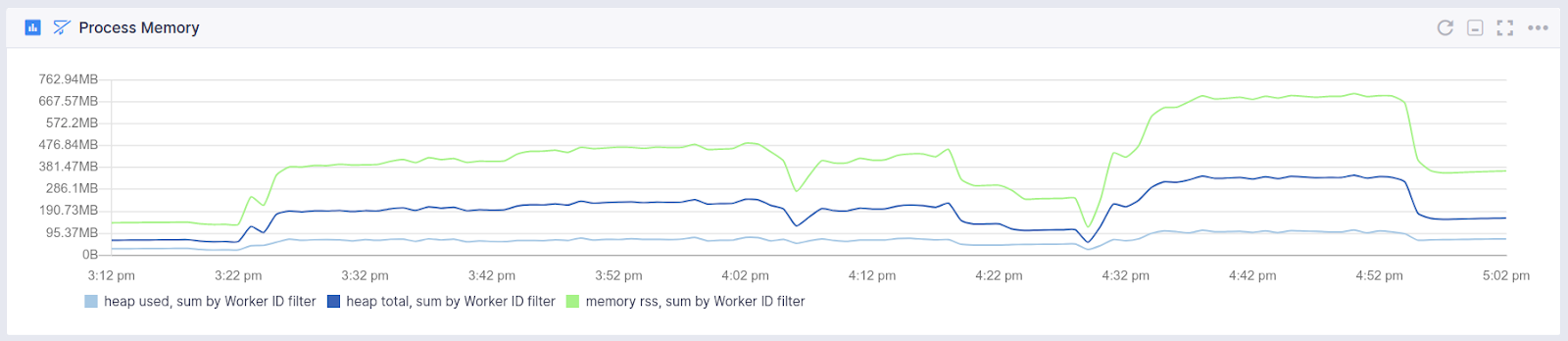

Memory Usage and Leaks Metrics for Node.js

To understand memory usage and potential leaks, you first need to understand what the heap and the stack are. Values can be stored in either the stack or the heap. You can picture the stack as a stack of books, where each book is a function call together with its context, such as its arguments and local variables. The most recent call sits on top and is removed when it returns. The heap is a larger region that stores everything that is allocated dynamically, such as objects, strings, and closures.

With that out of the way, there’s one key thing about Node.js process memory you must know: the heap of a single process is capped. Older Node.js versions limited it to roughly 1.5 GB by default on 64-bit systems; newer versions base the default on the system’s available memory, and you can raise it with the `--max-old-space-size` flag. Memory leaks are a common issue in Node.js, and they eat into that limit. They happen when objects are referenced for too long, meaning values are kept in memory even though they’re no longer needed. Because Node.js is based on the V8 engine, it uses garbage collection to reclaim memory used by variables that are no longer needed. Reclaiming memory pauses program execution; we’ll cover garbage collection in more detail in the next section.
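Rather than assuming a fixed number, you can ask V8 which heap limit your process is actually running with. This small sketch reads `heap_size_limit` from `v8.getHeapStatistics()`; the `heapLimitMB` helper name is just for illustration.

```typescript
import { getHeapStatistics } from "node:v8";

// Returns the maximum heap size V8 will allow for this process, in megabytes.
function heapLimitMB(): number {
  return getHeapStatistics().heap_size_limit / 1024 / 1024;
}

console.log(`Heap limit: ${Math.round(heapLimitMB())} MB`);
```

Starting the process with, for example, `node --max-old-space-size=4096 app.js` raises the old-generation limit to about 4 GB, and the reported value should grow accordingly (it also includes the young generation, so it will be slightly higher).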

Noticing memory leaks is easier than you might think. If your process memory keeps growing steadily, while not periodically being reduced by garbage collection, you most likely have a memory leak. Ideally, you’d want to focus on preventing memory leaks rather than troubleshooting and debugging them. If you come across a memory leak in your application, it’s horribly difficult to track down the root cause. The metrics you need to watch out for are:

- Released memory between Garbage Collection Cycles

- Process Heap Size

- Process Heap Usage
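Node.js exposes these numbers through `process.memoryUsage()`. The sketch below assumes that process heap size corresponds to `heapTotal` (memory V8 has reserved for the heap) and process heap usage to `heapUsed` (memory occupied by objects); a monitoring agent may define them slightly differently. The `readHeap` helper and the 10-second interval are illustrative choices.

```typescript
interface HeapSnapshot {
  rssMB: number; // resident set size: all memory held by the process
  heapTotalMB: number; // assumed "Process Heap Size": memory V8 has reserved for the heap
  heapUsedMB: number; // assumed "Process Heap Usage": memory occupied by objects on the heap
}

// Converts bytes to megabytes, rounded to one decimal place.
const toMB = (bytes: number): number => Math.round((bytes / 1024 / 1024) * 10) / 10;

function readHeap(): HeapSnapshot {
  const { rss, heapTotal, heapUsed } = process.memoryUsage();
  return { rssMB: toMB(rss), heapTotalMB: toMB(heapTotal), heapUsedMB: toMB(heapUsed) };
}

// Sample every 10 seconds; unref() keeps this timer from holding the process open.
setInterval(() => console.log(readHeap()), 10_000).unref();
```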

I also wrote a dedicated article about Node.js memory leak detection, should you be interested in that.

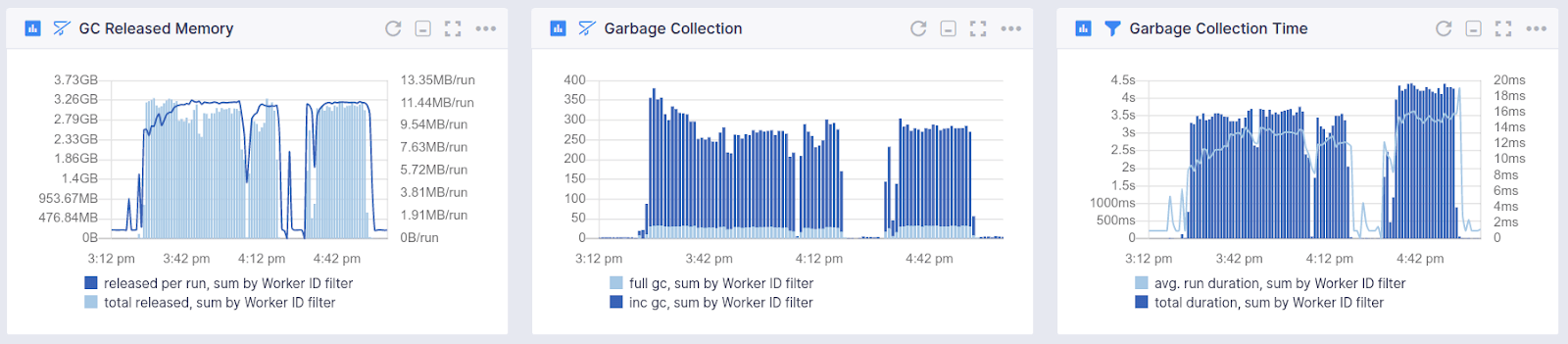

Garbage Collection Metrics for Node.js

In the V8 runtime, garbage collection pauses program execution. There are two main types of garbage collection. One is called scavenging, which runs short, incremental cycles that process only a part of the heap at a time: the young generation, where newly allocated objects live. This is very quick in comparison to full garbage collection cycles, which process the rest of the heap, where objects and variables that survived multiple scavenges end up. Because full garbage collection cycles pause program execution for longer, they are executed less frequently.

By measuring how often full and incremental garbage collection cycles run, how long they take, and how much memory they release, you can see how garbage collection impacts your application. Comparing the released memory with the size of the heap can reveal a growing trend, which helps you figure out whether you have a memory leak.
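Spotting a growing trend doesn’t have to be fancy. As a sketch, assuming you collect heap usage right after each garbage collection cycle (for example, from `process.memoryUsage().heapUsed`), a least-squares slope tells you how fast the baseline is rising. Both helpers and the 1 MB-per-sample threshold are hypothetical; tune the threshold to your sampling interval and traffic.

```typescript
// Least-squares slope of heap usage across samples, in bytes per sample.
function heapGrowthPerSample(samples: number[]): number {
  const n = samples.length;
  if (n < 2) return 0;
  const meanX = (n - 1) / 2;
  const meanY = samples.reduce((a, b) => a + b, 0) / n;
  let num = 0;
  let den = 0;
  samples.forEach((y, x) => {
    num += (x - meanX) * (y - meanY);
    den += (x - meanX) ** 2;
  });
  return num / den;
}

// Heuristic: flag a possible leak when post-GC heap usage keeps climbing faster than a threshold.
function looksLikeLeak(postGcHeapUsed: number[], minBytesPerSample = 1024 * 1024): boolean {
  return heapGrowthPerSample(postGcHeapUsed) > minBytesPerSample;
}
```

A healthy heap saws up and down but its post-GC baseline stays flat; a steadily positive slope over many samples is the pattern worth investigating.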

Because of everything mentioned above, you should monitor the following Node.js garbage collection metrics:

- Time consumed for garbage collection

- Counters for full garbage collection cycles

- Counters for incremental garbage collection cycles

- Released memory after garbage collection
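Node.js reports garbage collection events through the `perf_hooks` module. The sketch below uses a `PerformanceObserver` subscribed to `gc` entries to count cycles by kind and add up their duration. It assumes Node.js 16 or newer, where the kind is available on `entry.detail`. Node distinguishes major (full mark-sweep-compact), minor (scavenge), incremental (incremental marking), and weak-callback GC events, so the sketch counts them separately rather than guessing which ones your monitoring tool labels as incremental. Released memory isn’t part of these entries; you’d compare `process.memoryUsage()` snapshots for that. The `trackGc` helper is hypothetical.

```typescript
import { PerformanceObserver, constants } from "node:perf_hooks";

interface GcStats {
  major: number; // full mark-sweep-compact cycles
  minor: number; // scavenges of the young generation
  incremental: number; // incremental marking steps
  weakCallbacks: number; // weak callback processing
  totalDurationMs: number; // summed duration of all observed GC events
}

function trackGc(): { stats: GcStats; stop: () => void } {
  const stats: GcStats = { major: 0, minor: 0, incremental: 0, weakCallbacks: 0, totalDurationMs: 0 };
  const observer = new PerformanceObserver((list) => {
    for (const entry of list.getEntries()) {
      stats.totalDurationMs += entry.duration;
      // Node.js 16+ puts the GC kind on entry.detail.
      const kind = (entry.detail as { kind?: number } | undefined)?.kind;
      switch (kind) {
        case constants.NODE_PERFORMANCE_GC_MAJOR: stats.major++; break;
        case constants.NODE_PERFORMANCE_GC_MINOR: stats.minor++; break;
        case constants.NODE_PERFORMANCE_GC_INCREMENTAL: stats.incremental++; break;
        case constants.NODE_PERFORMANCE_GC_WEAKCB: stats.weakCallbacks++; break;
      }
    }
  });
  observer.observe({ entryTypes: ["gc"] });
  return { stats, stop: () => observer.disconnect() };
}
```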

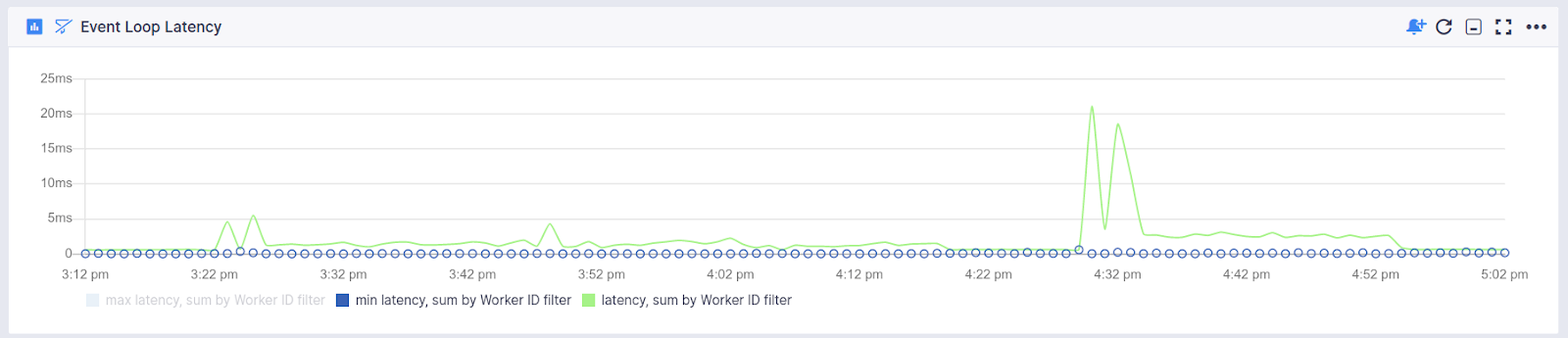

Node.js Event Loop Metrics

Node.js is inherently fast because it can process events asynchronously. What makes this possible is the event loop. While the operating system or libuv’s thread pool carries out I/O in the background, the event loop picks up the completed operations and runs the functions registered to respond to those events on the main thread. Such functions are called callback functions.

Because Node.js uses asynchronous operations, it doesn’t waste CPU cycles waiting for I/O operations to finish. A server can handle a huge number of connections without being blocked by I/O. This is called non-blocking I/O, a famous term. However, the event loop can still slow down, which ultimately makes every subsequent event take longer to process. This is called event loop lag.

Common causes of event loop lag are long-running synchronous processes and an incremental increase in tasks per loop.

Long-running synchronous processes

Be mindful of how you handle synchronous execution in your application, because while synchronous code runs, all other operations need to wait. Hence the famous rule for Node.js performance: don’t block the event loop! You can’t avoid all the CPU-bound work your server does, but you can be smart about how you execute asynchronous vs. synchronous tasks. As mentioned above, use forks or child processes for heavy synchronous tasks.
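Besides offloading work to a child process, the Node.js guide "Don’t Block the Event Loop" describes another technique called partitioning: split a long synchronous job into chunks and yield to the event loop between them with `setImmediate`, so timers and I/O callbacks get a turn. Here’s a minimal sketch; the `sumPartitioned` helper and its default chunk size are hypothetical.

```typescript
// Sums the integers 0..n-1 in chunks of `chunkSize`, yielding to the event loop
// between chunks so timers and I/O callbacks can run in the meantime.
function sumPartitioned(n: number, chunkSize = 100_000): Promise<number> {
  return new Promise((resolve) => {
    let i = 0;
    let sum = 0;
    const step = (): void => {
      const end = Math.min(i + chunkSize, n);
      for (; i < end; i++) sum += i;
      if (i < n) {
        setImmediate(step); // let the event loop run other callbacks before the next chunk
      } else {
        resolve(sum);
      }
    };
    step();
  });
}
```

The total work stays the same, so partitioning doesn’t lower CPU usage; it only keeps individual event loop iterations short. For truly heavy jobs, a child process is still the better fit.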

Incremental increase in tasks per loop

As your application scales, you will see an increase in load and in the number of tasks per loop. Node.js keeps track of all asynchronous functions that need to be handled by the event loop. When the count of tasks gets too high, the resulting lag causes response times to increase.

The good news is that you can alleviate this by increasing the number of processes running your application. By using the cluster module, you can utilize all the CPU cores of your server. Of course, you can also use PM2 to spawn worker processes. More about this in the next section.

If you want a more detailed explanation of the event loop, check out this talk by Philip Roberts from JSConf EU.

That’s why you need to monitor these metrics:

- Slowest Event Handling (Max Latency)

- Fastest Event Handling (Min Latency)

- Average Event Loop Latency
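Node.js can measure this for you with `perf_hooks.monitorEventLoopDelay()`, which samples the event loop on a timer and stores the delays in a histogram. The sketch below maps the histogram’s `min`, `mean`, and `max` (reported in nanoseconds) to the three metrics above and adds the 99th percentile. The `startLoopMonitor` wrapper and the 10 ms resolution are assumptions, and the recorded values can include the sampling interval itself, so an idle loop may report roughly the resolution rather than zero.

```typescript
import { monitorEventLoopDelay } from "node:perf_hooks";

interface LoopLatency {
  minMs: number; // fastest event handling (min latency)
  meanMs: number; // average event loop latency
  maxMs: number; // slowest event handling (max latency)
  p99Ms: number; // 99th percentile, useful for spotting occasional stalls
}

const NS_PER_MS = 1e6;

function startLoopMonitor(resolutionMs = 10) {
  const histogram = monitorEventLoopDelay({ resolution: resolutionMs });
  histogram.enable();
  return {
    // Returns the stats collected since the last read, then starts a new window.
    read(): LoopLatency {
      const latency: LoopLatency = {
        minMs: histogram.min / NS_PER_MS,
        meanMs: histogram.mean / NS_PER_MS,
        maxMs: histogram.max / NS_PER_MS,
        p99Ms: histogram.percentile(99) / NS_PER_MS,
      };
      histogram.reset();
      return latency;
    },
    stop(): void {
      histogram.disable();
    },
  };
}
```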

Node.js Cluster-Mode and Forking Worker Processes

So far, I’ve mentioned the single-threaded nature of Node.js several times, as well as the memory cap of a single process and how blocking the thread is something to avoid at all costs. The cluster module is how you scale Node.js beyond these limits.

By using the cluster module you can create a primary process (called the master process in older Node.js versions) that shares sockets with forked worker processes. These processes can exchange messages. Here’s the kicker. Each forked worker process has its own process ID and can run on a separate CPU core. A typical use case for web servers is forking worker processes that operate on a shared server socket, with incoming connections distributed among them in round-robin fashion.

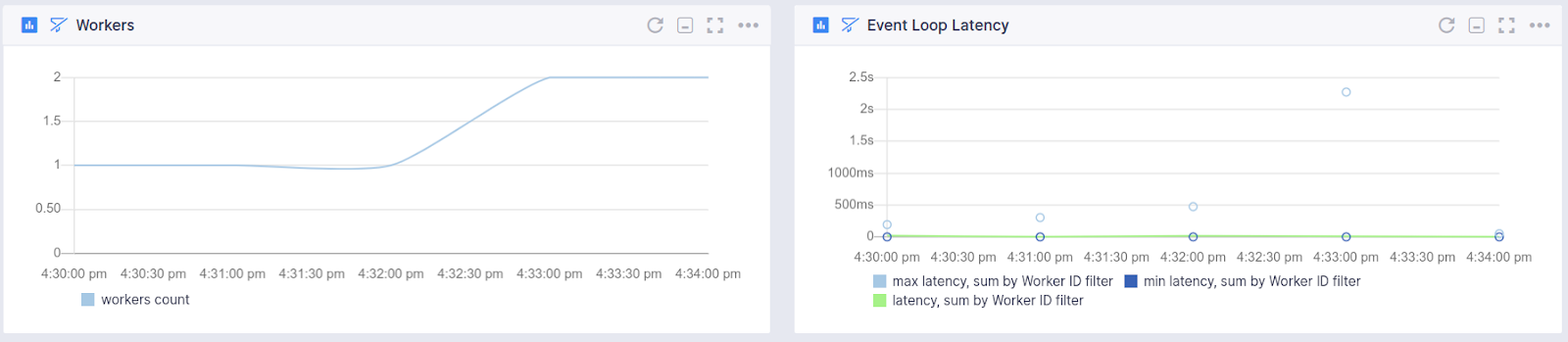

Tracking the number of worker processes is important, both for the workers spawned by the cluster module and for the child processes that run synchronous tasks away from the main thread. If they get terminated for some reason, you need to make sure they get running again. Having this feature in a monitoring tool can be a big advantage. Read more about choosing the right monitoring tools for your needs from our alerting and monitoring guide.
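Here’s a minimal sketch of cluster mode with the worker count kept in check: the primary forks one worker per CPU core, replaces any worker that exits, and periodically logs how many workers are alive. Port 3000, the logging, and the helper names are assumptions for illustration; it relies on `cluster.isPrimary`, available since Node.js 16 (older versions use `cluster.isMaster`).

```typescript
import cluster from "node:cluster";
import { createServer } from "node:http";
import { cpus } from "node:os";

const PORT = 3000; // assumed port for this example

// One worker per CPU core, but never fewer than one (cpus() can be empty on some platforms).
function desiredWorkerCount(): number {
  return Math.max(1, cpus().length);
}

function runPrimary(): void {
  const count = desiredWorkerCount();
  for (let i = 0; i < count; i++) cluster.fork();

  // Replace any worker that dies so the worker count stays constant.
  cluster.on("exit", (worker, code, signal) => {
    console.warn(`worker ${worker.process.pid} exited (${signal ?? code}), restarting`);
    cluster.fork();
  });

  // Report the current worker count periodically, e.g. to a metrics backend.
  setInterval(() => {
    console.log(`worker count: ${Object.keys(cluster.workers ?? {}).length}`);
  }, 10_000);
}

function runWorker(): void {
  // All workers listen on the same port; the primary distributes incoming
  // connections (round-robin by default on every platform except Windows).
  createServer((_req, res) => {
    res.end(`handled by ${process.pid}\n`);
  }).listen(PORT);
}

function main(): void {
  if (cluster.isPrimary) runPrimary();
  else runWorker();
}
```

Calling `main()` from your entry point starts the cluster. Restarting unconditionally can loop forever if a worker crashes on startup, so production code usually adds a backoff or restart limit, or leaves supervision to a process manager like PM2.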

Metrics to watch here are:

- Worker count

- Event loop latency per worker

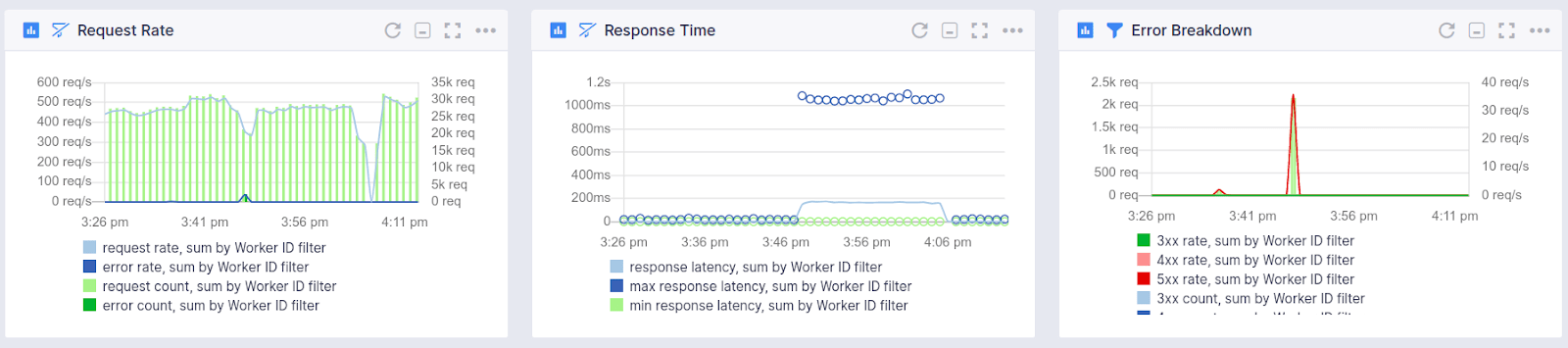

Node.js HTTP Request/Response Latency

Keeping an eye on user-facing latencies is the most crucial step in monitoring any API. Responding to the HTTP requests hitting your server in a timely manner is what will keep your customers coming back. Monitoring API routes of popular frameworks, like Express, Koa, and Hapi, is a must.

When monitoring HTTP request and response metrics, you have to take four key values into account:

- Response time

- Request rate

- Error rates

- Request/Response content size
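Frameworks and monitoring agents usually collect these for you, but the mechanics are simple. This sketch wraps a plain `node:http` request handler and, on each response’s `finish` event, records per-route counts, server errors (5xx), total and maximum response time, and response size taken from the `Content-Length` header when the handler sets one. The `withMetrics` wrapper, the in-memory `stats` map, and the example server are hypothetical; request rate would be the change in `count` divided by the length of your reporting window.

```typescript
import { createServer, type IncomingMessage, type ServerResponse } from "node:http";

type Handler = (req: IncomingMessage, res: ServerResponse) => void;

interface RouteStats {
  count: number; // responses sent
  errors: number; // responses with a 5xx status
  totalMs: number; // summed response time, for averages
  maxMs: number; // slowest response
  bytesOut: number; // summed Content-Length of responses that set it
}

const stats = new Map<string, RouteStats>();

function withMetrics(handler: Handler): Handler {
  return (req, res) => {
    const start = process.hrtime.bigint();
    res.on("finish", () => {
      const ms = Number(process.hrtime.bigint() - start) / 1e6;
      // Group by method and path, ignoring the query string.
      const key = `${req.method} ${(req.url ?? "").split("?")[0]}`;
      const s = stats.get(key) ?? { count: 0, errors: 0, totalMs: 0, maxMs: 0, bytesOut: 0 };
      s.count++;
      if (res.statusCode >= 500) s.errors++;
      s.totalMs += ms;
      s.maxMs = Math.max(s.maxMs, ms);
      s.bytesOut += Number(res.getHeader("content-length") ?? 0) || 0;
      stats.set(key, s);
    });
    handler(req, res);
  };
}

// Example usage: an instrumented server (not started here).
function createInstrumentedServer() {
  return createServer(
    withMetrics((_req, res) => {
      const body = "hello";
      res.setHeader("Content-Length", Buffer.byteLength(body));
      res.end(body);
    }),
  );
}
```

Responses aborted by the client don’t emit `finish`, so a production version would also listen for `close`.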

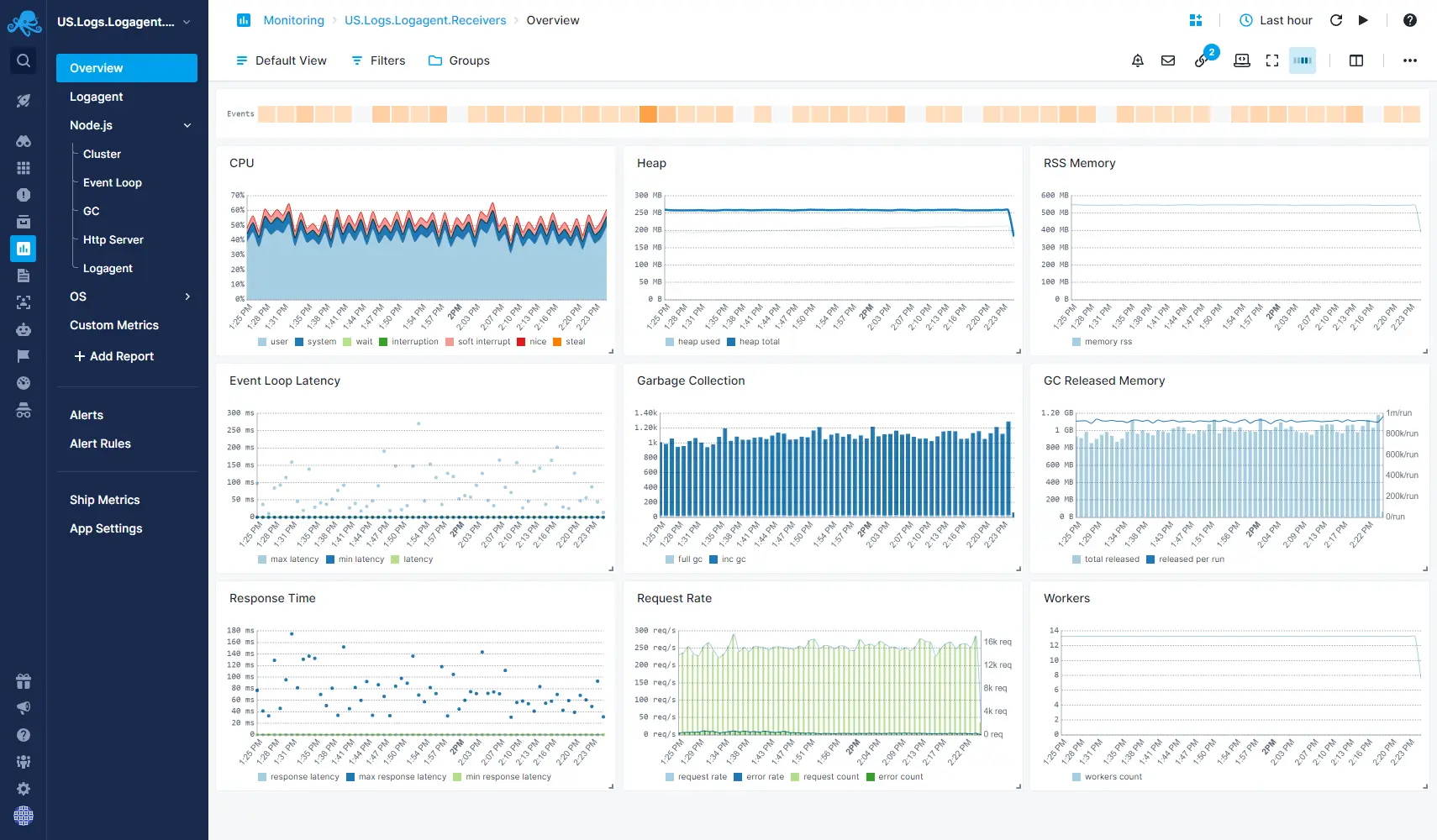

Node.js Monitoring Dashboard and Integrations

Your Node.js application will rarely run by itself without supporting services like Nginx for proxying, Redis for caching, Elasticsearch for indexing and full-text search, or persistent storage like MongoDB or PostgreSQL. Integrating these services with Sematext is just as simple as adding Node.js metrics. When choosing a monitoring solution, make sure you can create dashboards with all these metrics in one place. Having an overview of all services and their health is crucial.

Seeing metrics for all the systems that surround your Node.js application is invaluable. Here is just a small example of a Node.js monitoring dashboard combining Node.js, Nginx, and Elasticsearch metrics.

Wrapping up Node.js Key Metrics

Monitoring the health and performance of your Node.js applications can be hard to get right. Node.js key metrics are tightly coupled. Garbage collection cycles cause changes in process memory and CPU usage. Keeping an eye on these Node.js metrics is crucial for keeping your application up and healthy while serving your users with minimal latency.

These are my top Node.js key metrics to monitor. Feel free to let me know in the comments below what you think is crucial.

If you need an observability solution for your software stack, check out Sematext Cloud. We’re pushing to open source our products and make an impact. If you’d like to try us out and monitor your Node.js applications, sign up to get a 30-day pro trial, or choose the free tier right away.