Monitoring Kafka is a tricky task. As you saw in the first chapter, Kafka key metrics to monitor, the setup, tuning, and operations of Kafka require deep insight into performance metrics such as consumer lag, I/O utilization, garbage collection, and more. Sematext is an excellent alternative to other Kafka monitoring tools because it’s quick and simple to use. It also keeps all your infrastructure and application metrics under one roof, together with logs and other data your team can share.

How Sematext Helps Save Time, Work, & Costs

Here are a few things you will NOT have to do when using Sematext for Kafka monitoring:

- figure out which metrics to collect and which ones to ignore

- give metrics meaningful labels

- hunt for metric descriptions in the docs to know what each one actually shows

- build charts that group the metrics you want to see together on one chart, instead of spreading them across several separate charts

- figure out which aggregation to use for each set of metrics, like min, max, avg, or something else

- build dashboards to combine charts with metrics you typically want to see together

- set up basic alert rules

And that’s not even the whole story. Do you want to collect Kafka logs? How about structuring them? Sematext does all this automatically for you!

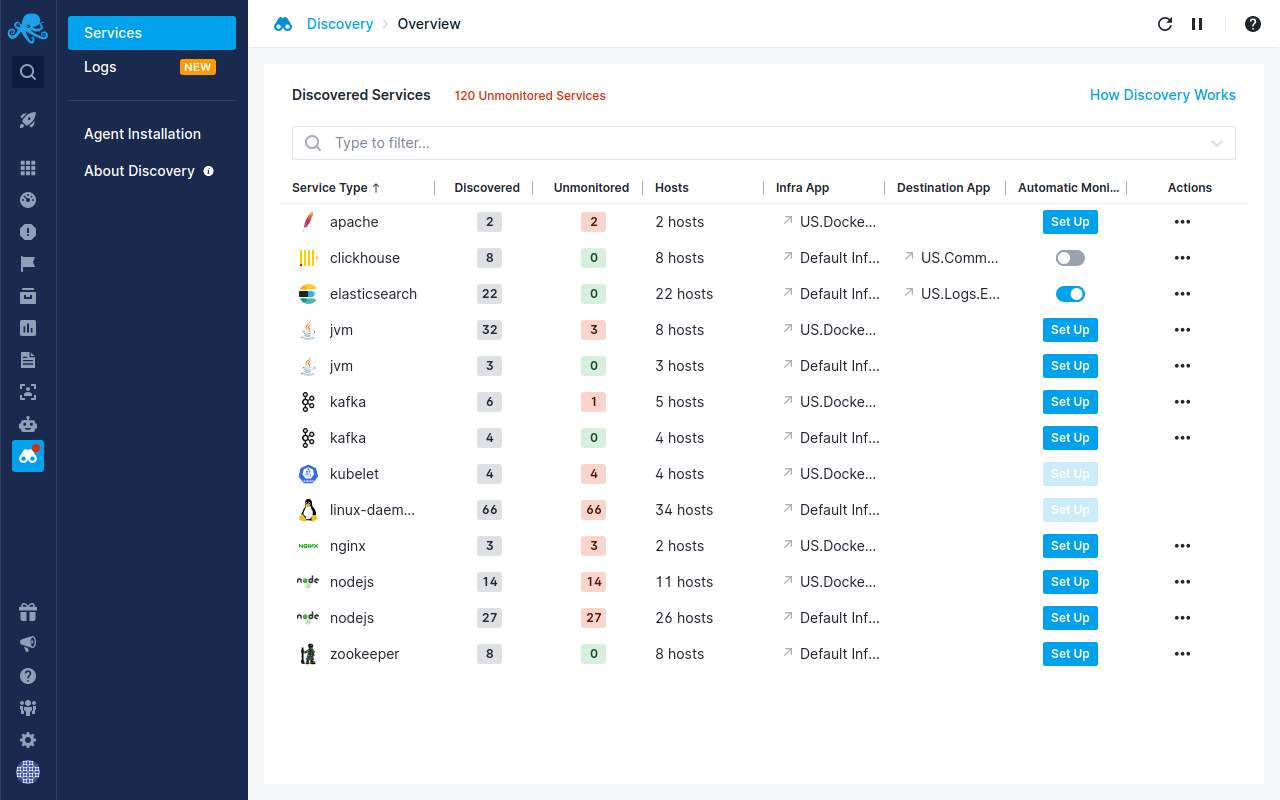

Sematext’s auto-discovery of services lets you automatically start monitoring your Kafka services and logs directly through the user interface.

In this chapter, we’ll look at how Sematext provides more comprehensive, easy-to-set-up monitoring for Kafka and other technologies in your infrastructure. By combining events, logs, and metrics in one integrated full-stack observability platform, and by using the open-source Sematext monitoring agent and its open-source integrations, you can monitor your whole infrastructure and apps, not just Kafka. You can see how it compares to similar IT solutions in our article about the best infrastructure monitoring software.

You can also get deeper visibility into your entire software stack by collecting, processing, and analyzing your logs.

Apache Kafka Monitoring

Collecting Apache Kafka Metrics

The Sematext Kafka integration collects 168 different Kafka metrics for the JVM, consumer lag, controllers, replicas, requests, and more. Sematext maintains and supports the official Kafka monitoring integration. Another cool fact about the Sematext Kafka integration is that it’s customizable and open source. Bottom line: you don’t have to work out how to configure the agent for metrics collection, which is a huge time saver!

Installing the Monitoring Agent

Setting up the monitoring agent takes less than 5 minutes:

- Create a Kafka App in the Integrations / Overview (note that we have instances of Sematext Cloud running in data centers in both the US and Europe). This will let you install the agent and control access to your monitoring and logs data. The short What is an App in Sematext Cloud guide has more details.

- Name your Kafka monitoring App and, if you want to collect Kafka logs as well, create a Logs App along the way.

- Install the Sematext Agent according to the setup instructions displayed in the UI.

For example, on Ubuntu, add the Sematext Linux package repository and install the agent with the following commands:

echo "deb http://pub-repo.sematext.com/ubuntu sematext main" | sudo tee /etc/apt/sources.list.d/sematext.list > /dev/null
wget -O - https://pub-repo.sematext.com/ubuntu/sematext.gpg.key | sudo apt-key add -
sudo apt-get update
sudo apt-get install spm-client

Then set up Kafka monitoring on each of the Kafka components: Broker, Producers, and Consumers.

Setting up Kafka monitoring on Kafka Broker is as easy as running the following command:

sudo bash /opt/spm/bin/setup-sematext --monitoring-token <your-monitoring-token-goes-here> --app-type kafka --app-subtype kafka-broker --agent-type javaagent --infra-token <your-infra-monitoring-token-goes-here>

After preparing the Kafka Broker with the above command, add the following options to the KAFKA_JMX_OPTS variable in $KAFKA_HOME/bin/kafka-server-start.sh. If KAFKA_JMX_OPTS isn’t defined there, create it:

-Dcom.sun.management.jmxremote -javaagent:/opt/spm/spm-monitor/lib/spm-monitor-generic.jar=<your-monitoring-token-goes-here>:kafka-broker:default
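If you’d rather not hand-edit the variable, the options can be appended idempotently, so running the snippet twice doesn’t add the agent twice. The Bash sketch below assumes it is placed in kafka-server-start.sh before that script hands off to kafka-run-class.sh; the function names and the SEMATEXT_MONITORING_TOKEN variable are hypothetical, while the option strings are copied from the line above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append option $1 to KAFKA_JMX_OPTS unless it is already present.
append_once() {
  case " ${KAFKA_JMX_OPTS:-} " in
    *" $1 "*) ;;  # already there, nothing to do
    *) KAFKA_JMX_OPTS="${KAFKA_JMX_OPTS:+$KAFKA_JMX_OPTS }$1" ;;
  esac
}

# Hypothetical wrapper: add the JMX flag and the Sematext Java agent for the broker,
# creating KAFKA_JMX_OPTS if it does not exist yet.
add_sematext_broker_agent() {
  local token="$1"
  append_once "-Dcom.sun.management.jmxremote"
  append_once "-javaagent:/opt/spm/spm-monitor/lib/spm-monitor-generic.jar=${token}:kafka-broker:default"
  export KAFKA_JMX_OPTS
}

# SEMATEXT_MONITORING_TOKEN is a placeholder you would set yourself.
add_sematext_broker_agent "${SEMATEXT_MONITORING_TOKEN:-your-monitoring-token-goes-here}"
# A second call is a no-op, so re-running the script is harmless.
add_sematext_broker_agent "${SEMATEXT_MONITORING_TOKEN:-your-monitoring-token-goes-here}"
echo "$KAFKA_JMX_OPTS"
```

Note that if kafka-run-class.sh finds KAFKA_JMX_OPTS already set, it uses your value instead of its own defaults, so keep any other JMX flags you rely on in the same variable.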

Setting up Kafka monitoring on each Kafka Producer is just as easy as setting it up on the Broker. You need to run the following command (please keep in mind that Sematext Cloud supports Java and Scala-based Producers at the moment):

sudo bash /opt/spm/bin/setup-sematext --monitoring-token <your-monitoring-token-goes-here> --app-type kafka --app-subtype kafka-producer --agent-type javaagent --infra-token <your-infra-monitoring-token-goes-here>

After preparing each Kafka Producer with the above command you need to add the following line to the Kafka Producer startup parameters:

-Dcom.sun.management.jmxremote -javaagent:/opt/spm/spm-monitor/lib/spm-monitor-generic.jar=<your-monitoring-token-goes-here>:kafka-producer:default

Finally, monitoring on each Kafka Consumer needs to be configured by running the following command:

sudo bash /opt/spm/bin/setup-sematext --monitoring-token <your-monitoring-token-goes-here> --app-type kafka --app-subtype kafka-consumer --agent-type javaagent --infra-token <your-infra-monitoring-token-goes-here>

After preparing each Kafka Consumer with the above command you need to add the following line to the Kafka Consumer startup parameters:

-Dcom.sun.management.jmxremote -javaagent:/opt/spm/spm-monitor/lib/spm-monitor-generic.jar=<your-monitoring-token-goes-here>:kafka-consumer:default

Please remember to restart each Broker, Producer, and Consumer for these changes to take effect. Go grab a drink, but hurry: Kafka metrics will start appearing in your charts within a minute!
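The three setup commands and the three -javaagent lines differ only in the component name, so a small Bash sketch can print both for a given role and catch a mistyped subtype before anything is changed. It is a dry run that only echoes the commands; the function name sematext_kafka_commands is hypothetical, and the flags are copied verbatim from the commands above.

```shell
#!/usr/bin/env bash
# Hypothetical dry-run helper: print the setup command and the JVM options
# for one Kafka component (broker, producer, or consumer). Nothing is executed.
sematext_kafka_commands() {
  local role="$1" monitoring_token="$2" infra_token="$3"
  case "$role" in
    broker|producer|consumer) ;;
    *) echo "unknown role: $role (expected broker, producer, or consumer)" >&2; return 1 ;;
  esac
  local subtype="kafka-${role}"
  # Line 1: the agent setup command for this component.
  echo "sudo bash /opt/spm/bin/setup-sematext --monitoring-token ${monitoring_token} --app-type kafka --app-subtype ${subtype} --agent-type javaagent --infra-token ${infra_token}"
  # Line 2: the options to add to the component's JVM startup parameters.
  echo "-Dcom.sun.management.jmxremote -javaagent:/opt/spm/spm-monitor/lib/spm-monitor-generic.jar=${monitoring_token}:${subtype}:default"
}

# Example: what a consumer host needs (tokens are placeholders).
sematext_kafka_commands consumer MONITORING_TOKEN INFRA_TOKEN
```

For the Broker, the second line goes into KAFKA_JMX_OPTS; for Producers and Consumers, it goes into their JVM startup parameters.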

Kafka Monitoring Dashboard

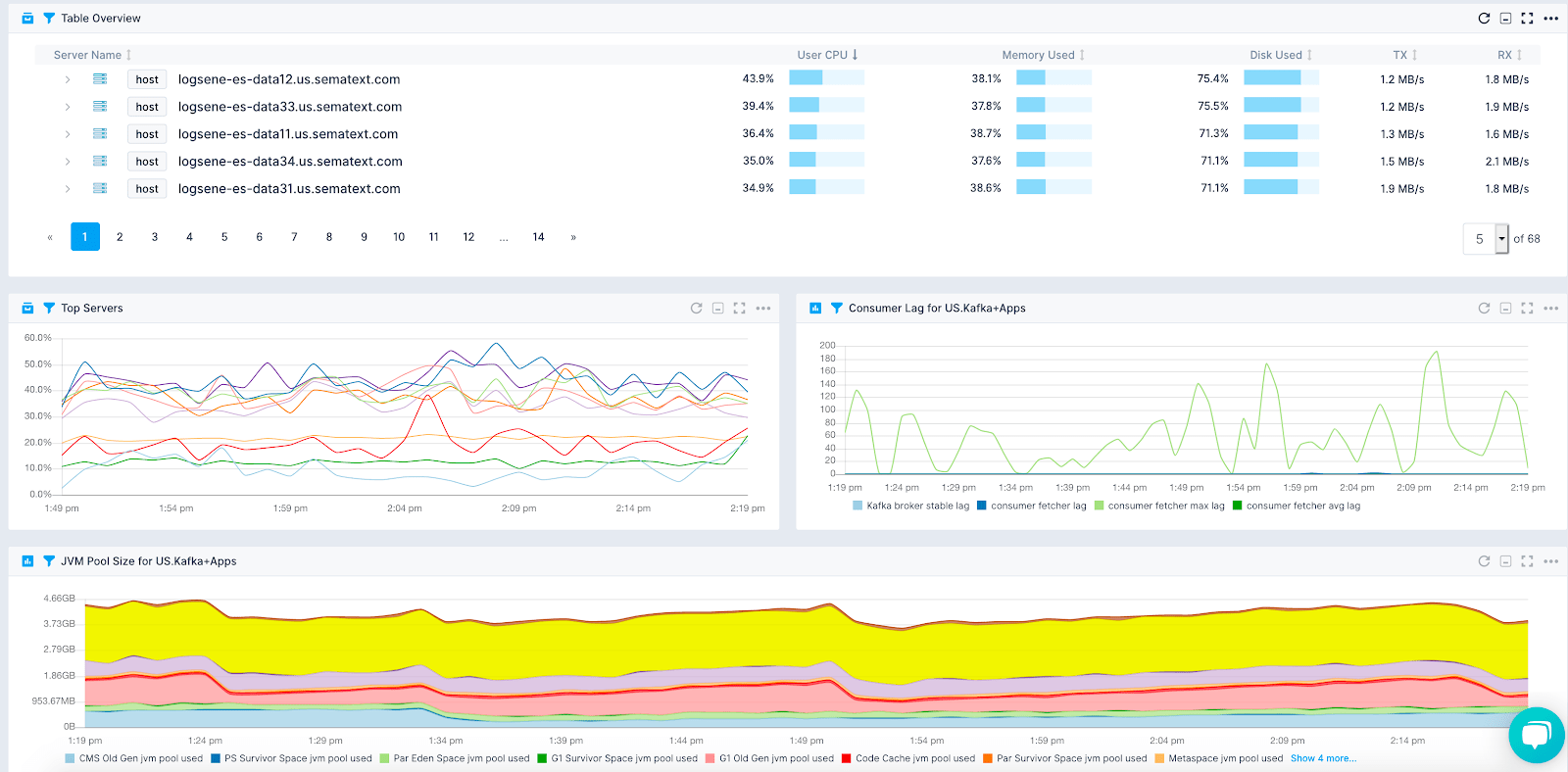

When you open the Kafka App, you’ll find a predefined set of dashboards that organize more than 70 Kafka metrics and general server metrics into charts, grouped as follows:

- Overview with charts for all key Kafka metrics

- Operating System metrics such as CPU, memory, network, disk usage, etc.

- Kafka metrics:

- Consumer Lag – consumer lag, static broker lag

- Partitions – broker partitions, leader partitions, offline partitions, under replicated partitions

- Leader Elections – broker leader elections, broker unclean leader elections, broker leader elections time

- Active Controllers – broker active controllers by host

- ISR/Log Flush – broker ISR shrinks and expands, broker log flushes, broker log flush times

- Log Cleaner – broker log cleaner buffer utilization, broker log cleaner working time, broker log cleaner recopy

- Queues/Expiries – broker response queue, broker request queue

- Replicas – broker replica max lag, broker replica minimum fetch, broker preferred replicas imbalances

- Requests – broker requests local time, broker requests remote time, broker requests queue time, broker response queue time, broker response send time, number of broker requests

- Topic – broker topic messages in, broker topic in/out, broker topic rejected, broker topic failed fetch/produce requests

- Topic Partition – broker log segment, broker log size, broker log offset increasing, broker partitions under-replicated

- Producer Batch/Buffer – producer batch size, producer max batch size, compression rate, producer buffer available bytes, producer buffer pool wait ratio, producer buffer total bytes

- Producer Communication – producer I/O time, producer I/O ratio, producer I/O wait time, producer I/O wait ratio, producer connection count, producer connection creation rate, producer connection close rate, producer network I/O rate

- Producer Req/Rec – producer records queue time, producer records send rate, producer records retry rate, producer records error rate, producer records per request, record size, producer requests in flight, producer waiting threads

- Producer Node – producer node in bytes rate, producer node out bytes rate, producer node request latency, producer node request max latency, producer node requests rate, producer node responses rate, producer requests size, producer requests maximum size

- Producer Topic – producer topic compression rate, producer topic bytes rate, producer records send rate, producer records retries rate, producer records errors rate

- Consumer Fetcher – consumer responses, consumer fetcher bytes, consumer responses bytes

- Consumer Communication – consumer I/O time, consumer I/O ratio, consumer I/O wait time, consumer I/O wait ratio, consumer connection count, consumer connection creation rate, consumer connection close rate, consumer network I/O rate

- Consumer Fetcher – records consumed rate, consumer records per request, consumer fetch latency, consumer fetch rate, bytes consumed rate, consumer fetch average size, consumer throttle maximum time

- Consumer Coordinator – consumer assigned partitions, consumer heartbeat response maximum time, consumer heartbeat rate, consumer join time, consumer join max time, consumer sync time, consumer max sync time, consumer join rate, consumer syncs rate

- Consumer Node – consumer node bytes rate, consumer node out bytes rate, consumer node request latency, consumer node request max latency, consumer node requests rate, consumer node responses rate, consumer node request size, consumer node request max size
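Consumer lag, the first metric group above, is commonly defined as the gap between the newest offset in a partition (the log-end offset) and the offset the consumer group has committed. Sematext computes lag for you; the Bash/awk sketch below only illustrates that arithmetic. It assumes a hypothetical input format of one partition per line (partition, log-end offset, committed offset), and the function name total_consumer_lag is also hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: per-partition and total consumer lag.
# Input (hypothetical format), one partition per line:
#   <partition> <log_end_offset> <committed_offset>
total_consumer_lag() {
  awk '
    NF == 3 {
      lag = $2 - $3
      if (lag < 0) lag = 0   # guard against stale or out-of-order readings
      printf "partition %s lag %d\n", $1, lag
      total += lag
    }
    END { printf "total lag %d\n", total }
  '
}

# Example with made-up offsets for three partitions.
printf '0 1500 1450\n1 980 980\n2 2210 2100\n' | total_consumer_lag
```

A partition whose consumer has caught up (partition 1 here) contributes 0, so a growing total points at the partitions that are falling behind.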

Kafka key metrics in Sematext Cloud

Set up Kafka Alerts

In order to save you time, Sematext automatically creates a set of default alert rules such as alerts for low disk space. You can create additional alerts on any metric. Check out the Alerts & Events Guide or watch Alerts in Sematext Cloud for more details about creating custom alerts.

Alerting on Kafka Metrics

There are 3 types of alerts in Sematext:

- Heartbeat alerts, which notify you when a Kafka Broker is down

- Classic threshold-based alerts that notify you when a metric value crosses a predefined threshold

- Alerts based on statistical anomaly detection that notify you when metric values suddenly change and deviate from the baseline

Let me show you how to create an alert rule for Kafka metrics. The Consumer Lag chart you see below shows a spike: our baseline is a low Consumer Lag value close to 0, but it jumped to over 100. To create an alert rule on a metric, open the drop-down menu in the top right corner of the chart and choose “Create alert”. The alert rule inherits the selected filters. You can choose various notification options, such as email or configured notification hooks like PagerDuty, Slack, VictorOps, BigPanda, OpsGenie, Pusher, generic webhooks, and many others. Alerts are triggered either by anomaly detection, which watches for metric changes in a given time window, or by classic thresholds.
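To make the difference between the two alert styles concrete, here is a Bash/awk sketch built around the Consumer Lag example: a threshold rule fires when the latest value exceeds a fixed limit, while the anomaly check compares the latest value with the mean and standard deviation of the preceding values. The z-score test is a simple stand-in chosen for illustration, not Sematext’s actual anomaly detection algorithm; the function names, the limit of 150, the factor of 3, and the sample series are all hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: a z-score check stands in for real anomaly detection.

# Threshold alert: fire when the latest value is above a fixed limit.
threshold_alert() {  # usage: threshold_alert LIMIT VALUE...
  local limit="$1"; shift
  echo "$@" | awk -v limit="$limit" '{
    latest = $NF
    if (latest > limit) print "ALERT threshold: " latest " > " limit
    else print "ok"
  }'
}

# Anomaly alert: fire when the latest value deviates from the baseline
# (all earlier values) by more than K population standard deviations.
anomaly_alert() {  # usage: anomaly_alert K VALUE...
  local k="$1"; shift
  echo "$@" | awk -v k="$k" '{
    n = NF - 1                           # baseline = every value except the latest
    if (n < 2) { print "not enough data"; exit }
    for (i = 1; i <= n; i++) sum += $i
    mean = sum / n
    for (i = 1; i <= n; i++) sq += ($i - mean) ^ 2
    sd = sqrt(sq / n)
    if (sd == 0) sd = 1                  # flat baseline: avoid division by zero
    latest = $NF
    z = (latest - mean) / sd
    if (z > k || z < -k) printf "ALERT anomaly: %s (z=%.1f)\n", latest, z
    else print "ok"
  }'
}

# Hypothetical lag series: near zero, then a sudden jump.
lag_history="0 1 0 2 1 0 1 120"
threshold_alert 150 $lag_history   # unquoted on purpose: one argument per value
anomaly_alert 3 $lag_history
```

With a limit of 150, the threshold rule prints "ok" while the anomaly check fires, because 120 is far outside a baseline that hovers around 0. That is the kind of sudden deviation anomaly-based alerts are meant to catch without you picking an exact limit up front.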

Alert creation for Kafka Consumer Fetcher Lag

Kafka Logs

Shipping Kafka Logs

Since having logs and metrics in one platform makes troubleshooting simpler and faster, let’s ship Kafka logs, too. You can choose from a number of log shippers, but we’ll use Logagent because it’s lightweight, easy to set up, and it can parse and structure logs out of the box. The log parser extracts timestamp, severity, and messages. For query traces, the log parser also extracts the unique query ID to group logs related to query execution. Let’s set it up and start shipping logs from Kafka!

- Create a Logs App to obtain an App token

- Install the Logagent npm package

sudo npm i -g @sematext/logagent

If you don’t have Node.js, you can install it easily. For example, on Debian/Ubuntu:

curl -sL https://deb.nodesource.com/setup_10.x | sudo -E bash -
sudo apt-get install -y nodejs

Install the Logagent service by specifying the logs token and the path to Kafka’s log files. You can use -g /var/log/kafka/server.log to ship logs only from the Kafka server or -g '/var/log/kafka/*.log' to ship all Kafka-related logs. If you run other services, such as ZooKeeper or Elasticsearch, on the same server, consider shipping all logs using -g '/var/log/**/*.log'. When the -g parameter is not specified, the default setting ships all logs from /var/log/**/*.log.
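It’s safest to quote the -g pattern. If the shell sees an unquoted glob, it expands it before logagent-setup runs, so the tool receives a list of files that exist right now instead of the pattern itself. The Bash sketch below demonstrates this in a throwaway directory (a stand-in for /var/log; nothing real is touched). count_args is a hypothetical helper that just reports how many arguments it received, and the `**` part needs Bash 4 or newer with globstar enabled. Once the quoted pattern reaches Logagent, matching is up to Logagent itself, so the globstar line only illustrates what `**` is meant to cover.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Throwaway directory standing in for /var/log.
demo_root=$(mktemp -d)
mkdir -p "$demo_root/kafka" "$demo_root/zookeeper"
touch "$demo_root/kafka/server.log" "$demo_root/kafka/controller.log" "$demo_root/zookeeper/zookeeper.log"

# Hypothetical helper: report how many arguments a command would receive.
count_args() { echo "$#"; }

# Unquoted: bash expands the pattern first, one argument per matching file.
unquoted=$(count_args -g $demo_root/kafka/*.log)
# Quoted: the pattern is passed through unchanged, as a single argument.
quoted=$(count_args -g "$demo_root/kafka/*.log")

# With globstar, ** also descends into subdirectories (kafka/ and zookeeper/ here).
shopt -s globstar
all_logs=( "$demo_root"/**/*.log )

echo "unquoted=$unquoted quoted=$quoted all=${#all_logs[@]}"
rm -rf "$demo_root"
```

Here the unquoted form hands the command three arguments (-g plus two file names), while the quoted form hands it exactly two (-g and the pattern).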

Logagent detects the init system and installs Systemd or Upstart service scripts. On Mac OS X it creates a launchd service. Simply run:

sudo logagent-setup -i YOUR_LOGS_TOKEN -g '/var/log/kafka/*.log'
# for the EU region:
# sudo logagent-setup -i YOUR_LOGS_TOKEN -u logsene-receiver.eu.sematext.com -g '/var/log/kafka/*.log'

The setup script generates the configuration file /etc/sematext/logagent.conf and starts Logagent as a system service. If you run Kafka in containers, check out how to set up Logagent for container logs.
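To show what structuring a log line means, here is a Bash sketch that splits a line in Kafka’s default log4j layout, `[%d] %p %m (%c)`, into timestamp, severity, message, and logger fields. This is not Logagent’s parser, just an illustration. The function name parse_kafka_log_line is hypothetical, the sample line is made up, and if you’ve changed Kafka’s log4j conversion pattern, the regex would have to change too.

```shell
#!/usr/bin/env bash
# Sketch: split one Kafka log line, assuming the default "[%d] %p %m (%c)" layout.
parse_kafka_log_line() {
  local line="$1"
  # Groups: 1 = timestamp, 2 = severity, 3 = message, 4 = logger (class name in parentheses)
  local re='^\[([^]]+)] ([A-Z]+) (.*) \(([^)]+)\)$'
  if [[ $line =~ $re ]]; then
    printf 'timestamp=%s\nseverity=%s\nmessage=%s\nlogger=%s\n' \
      "${BASH_REMATCH[1]}" "${BASH_REMATCH[2]}" "${BASH_REMATCH[3]}" "${BASH_REMATCH[4]}"
  else
    # Lines that don't match (e.g. stack-trace continuation lines) keep only a message field.
    printf 'message=%s\n' "$line"
  fi
}

parse_kafka_log_line '[2024-01-15 10:23:45,123] INFO [KafkaServer id=0] started (kafka.server.KafkaServer)'
```

Once severity is its own field, a query like severity:(error OR warn) no longer depends on where the word happens to appear in the raw line.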

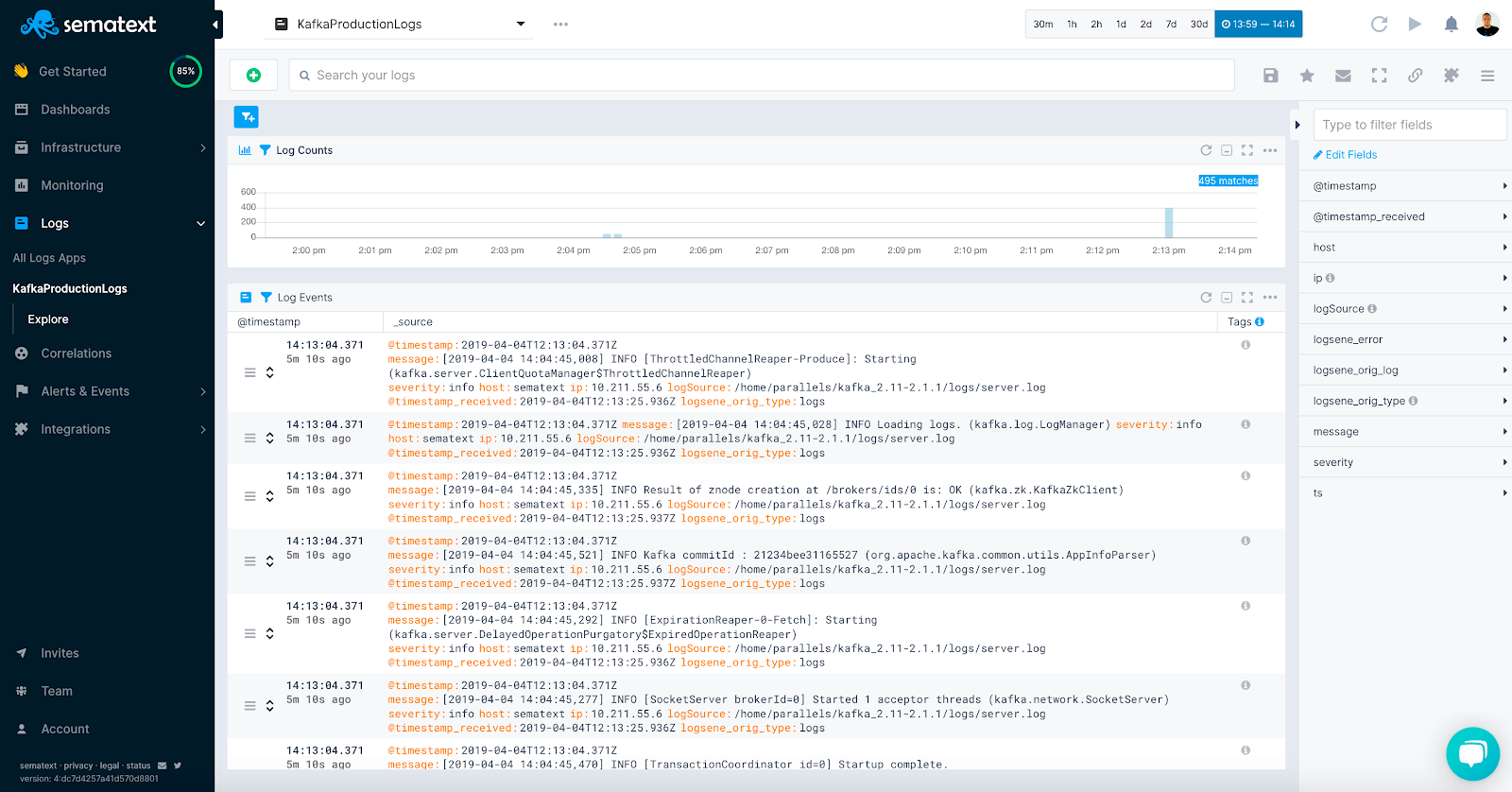

Log Search and Dashboards

Once your logs are stored in Sematext, you can search and filter them when troubleshooting, save queries you run frequently, create alerts based on these queries, and build your own logs dashboards.

Search for Kafka Logs

Log Search Syntax

If you know how to search with Google, you’ll know how to search your logs in Sematext Cloud.

- Use AND, OR, NOT operators – e.g. (error OR warn) NOT exception

- Group your AND, OR, NOT clauses – e.g. message:(exception OR error OR timeout) AND severity:(error OR warn)

- Don’t like Booleans? Use + and - to include and exclude – e.g. +message:error -message:timeout -host:db1.example.com

- Use explicit field references – e.g. message:timeout

- Need a phrase search? Use quotation marks – e.g. message:"fatal error"
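If it helps to map the Boolean syntax onto something familiar, the first query in the list above, (error OR warn) NOT exception, corresponds roughly to the grep pipeline in this Bash sketch, run against plain-text lines. It’s only an analogy: grep matches substrings line by line, while Sematext searches indexed, structured fields, so results can differ (grep’s "error" also matches "errors", for instance). Case-insensitive matching is an assumption here, and the function name and sample lines are hypothetical.

```shell
#!/usr/bin/env bash
# Rough local analogy for the query: (error OR warn) NOT exception
error_or_warn_not_exception() {
  # Keep lines containing "error" or "warn", then drop lines containing "exception".
  # -i makes both steps case-insensitive; `|| true` because grep exits with 1
  # when nothing matches, which is not a failure here.
  grep -Ei 'error|warn' | grep -vi 'exception' || true
}

# Example with made-up log lines.
printf '%s\n' \
  'WARN disk usage is high' \
  'ERROR request timed out' \
  'ERROR java.io.IOException: exception while fetching' \
  'INFO broker started' | error_or_warn_not_exception
```

The first stage plays the role of OR, and the inverted second stage plays the role of NOT; the + and - prefixes express the same include/exclude idea without Boolean keywords.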

When digging through logs, you might find yourself running the same searches over and over again. To solve this annoyance, Sematext lets you save queries so you can execute them quickly without having to retype them. Check out the using logs for troubleshooting guide to see how this makes your life easier.

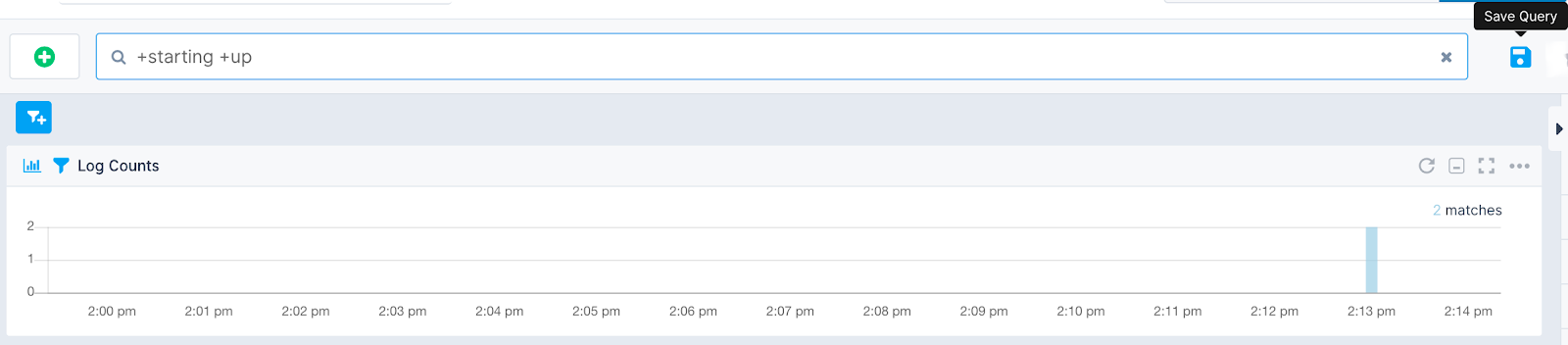

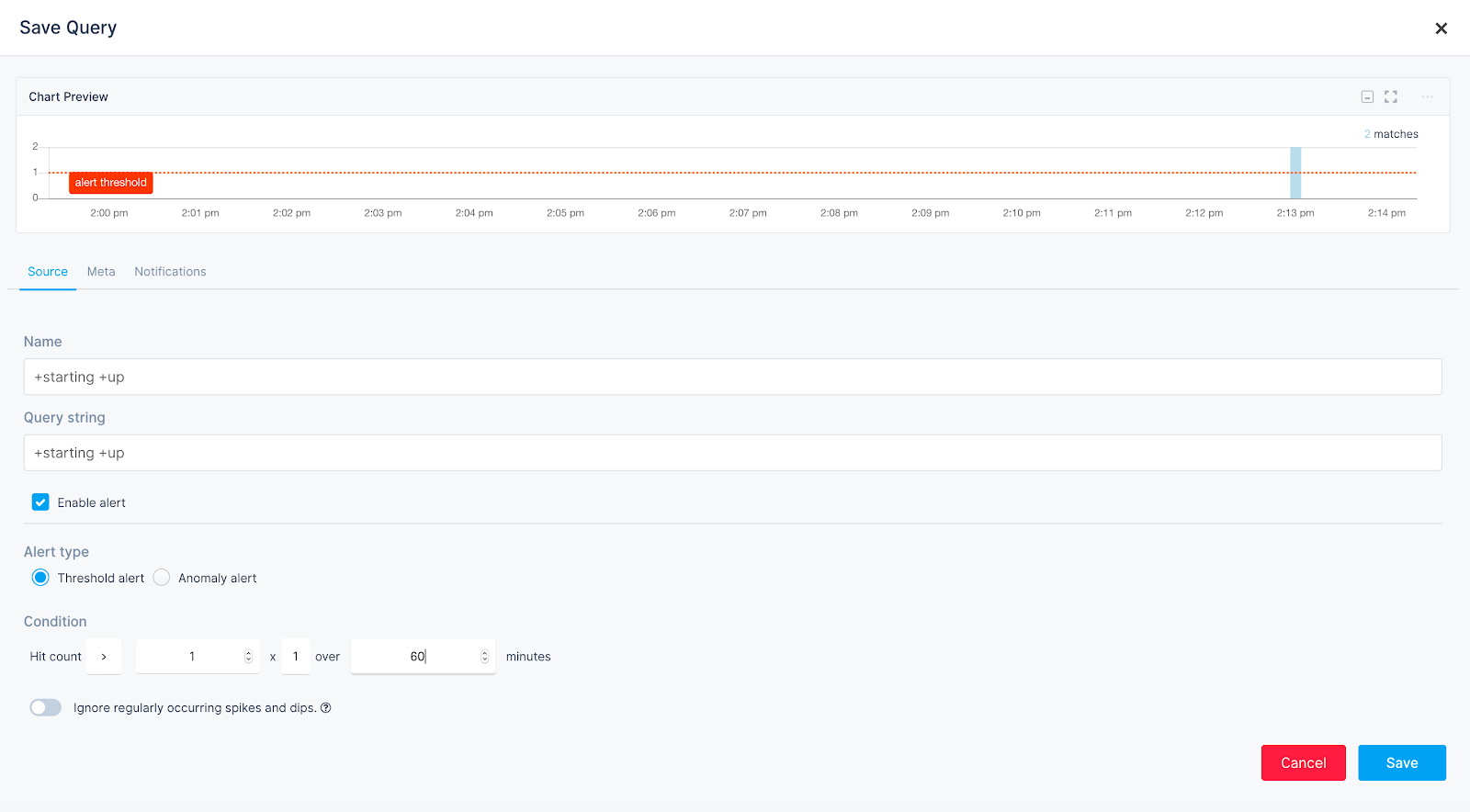

Alerting on Kafka Logs

To create a Logs alert, start by running a query that matches the log events we want to be alerted about. Write the query in the search box and click the antiquated floppy disk icon to save it.

Similar to the setup of metric alert rules, we can define threshold-based or anomaly detection alerts based on the number of matching log events the alert query returns.
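In other words, a log alert is a threshold or anomaly rule applied to a count of matching events. The Bash sketch below shows only the threshold variant: count the lines in a batch (say, the last few minutes of logs) that match a query, and fire when the count exceeds a limit. Sematext runs the saved query and evaluates the count for you; the function name log_count_alert, the ERROR pattern, the limit, and the sample lines are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: a threshold-style log alert over a batch of log lines read from stdin.
log_count_alert() {  # usage: log_count_alert PATTERN LIMIT < log_lines
  local pattern="$1" limit="$2" count
  # grep -c prints the number of matching lines; when nothing matches it still
  # prints 0 but exits with 1, so `|| true` keeps that from counting as a failure.
  count=$(grep -c -E -- "$pattern" || true)
  if (( count > limit )); then
    echo "ALERT: $count events matched '$pattern' (limit $limit)"
  else
    echo "ok: $count events matched '$pattern'"
  fi
}

# Example: alert when more than 2 ERROR lines show up in the batch (made-up data).
printf '%s\n' 'ERROR a' 'ERROR b' 'INFO c' 'ERROR d' | log_count_alert 'ERROR' 2
```

An anomaly-based log alert would compare the same count against its recent history instead of a fixed limit.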

You can check out a short video to learn more about Alerts in Sematext Cloud, or jump over to our Alerts guide in the docs to read more about creating alerts for logs and metrics.

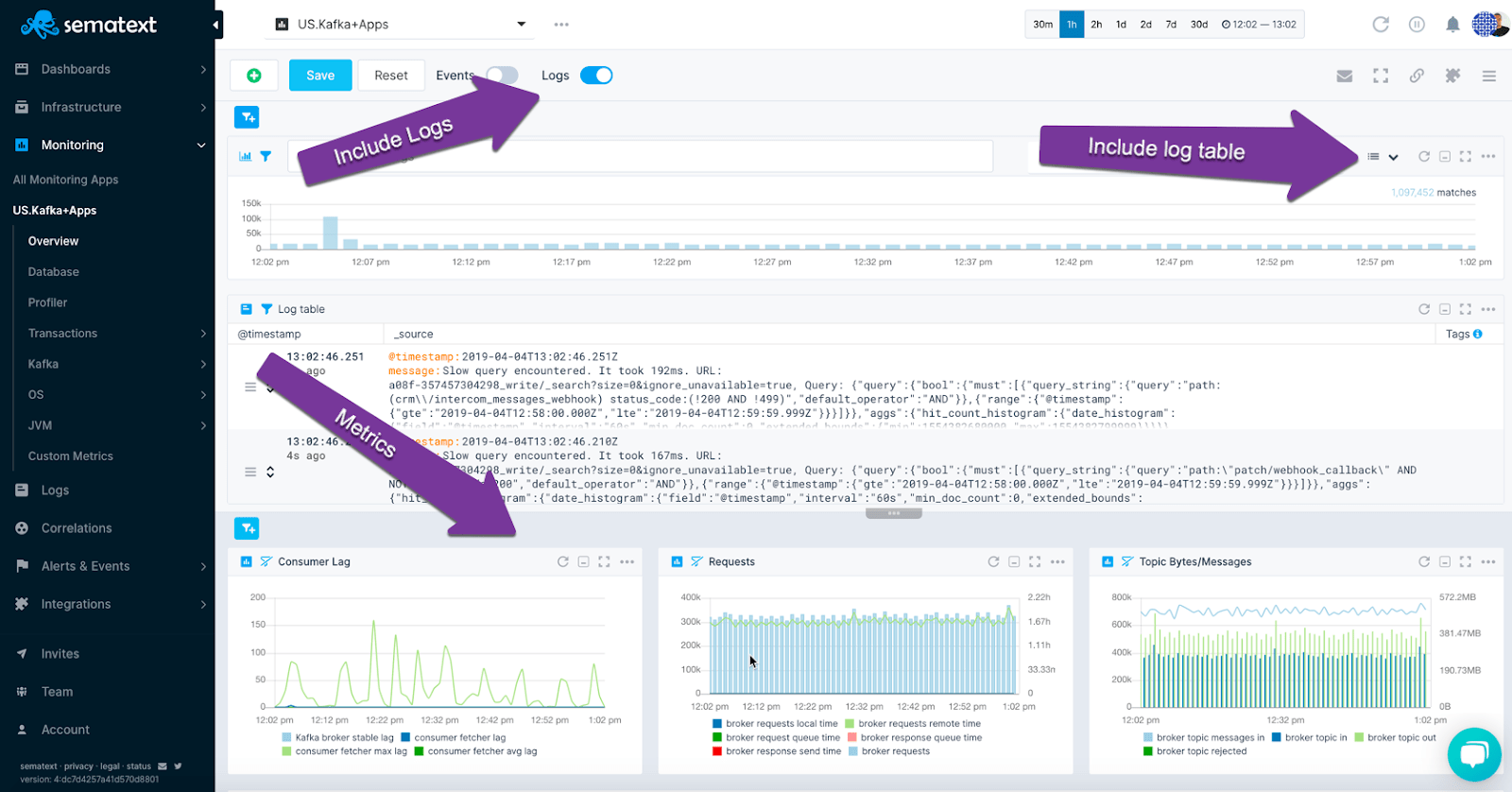

Kafka Metrics and Log Correlation

A typical troubleshooting workflow starts from detecting a spike in the metrics, then digging into logs to find the root cause of the problem. Sematext makes this really simple and fast. Your metrics and logs live under the same roof. Logs are centralized, the search is fast, and the powerful log search syntax is simple to use. Correlation of metrics and logs is literally one click away.

Kafka logs and metrics in a single view

Full Stack Observability for Kafka & Friends

Kafka’s best friend is Apache ZooKeeper. Kafka requires ZooKeeper to operate, handle partitions and perform leader elections. Monitoring ZooKeeper and Kafka together is crucial in order to correlate metrics from both and have full observability of your Kafka operations. Kafka also likes every piece of your environment that sends data to it or reads data from it. Sematext Cloud allows you to easily monitor your Kafka producers and Kafka consumers along with related applications and infrastructure, giving you insight not only about Kafka, but your whole system.

Monitor Kafka with Sematext

Monitoring Kafka involves identifying the key metrics, collecting metrics and logs, and connecting everything in a meaningful way. In this chapter, we’ve shown you how to monitor Kafka metrics and logs in one place. We used out-of-the-box and customized dashboards, metrics correlation, log correlation, anomaly detection, and alerts. With other open-source integrations, like Elasticsearch or ZooKeeper, you can easily start monitoring Kafka alongside metrics, logs, and distributed request traces from all technologies in your infrastructure. Having deeper visibility into Kafka with Sematext is pretty cool, and saves a ton of time. You can check it out today for yourself with a free trial. There are some sweet free tiers as well, and nice deals if you’re a startup!