Definition: What Is Log Management?

Log management is the process of handling log events generated by software applications and the infrastructure they run on. It involves log collection, aggregation, parsing, storage, analysis, search, archiving, and disposal, with the ultimate goal of using the data for troubleshooting and business insights while also ensuring the compliance and security of applications and infrastructure.

Logs are typically recorded in one or more log files. Log management allows you to gather that data in one place and look at it as a whole instead of as separate entities. You can then analyze the collected log data and identify issues and patterns to get a clear, visual picture of how all your systems perform at any given moment.

Logging has become an integral part of any DevOps team’s work. Log management solutions range from the popular open source ELK stack, typically deployed on your own infrastructure, to fully managed log management services such as Sematext Cloud.

Log Management Basics: What Is a Log File?

A log file is a text file where applications, including the operating system, write events. Logs show you what happened behind the scenes and when it happened, so that if something goes wrong with your systems, you have a detailed record of the actions leading up to the anomaly.

Therefore, log files make it easier for developers, DevOps, SysAdmins, or SecOps to get insights and identify the root cause of issues with applications and infrastructure.

Logs are also useful when systems behave normally: they give you insight into how your application reacts and performs, which you can use to improve it.

There are many different sources of logs, as well as log types. Here are some of the top log sources we see today, starting from the bottom of the stack.

Network Gear

As we interact with mobile apps, web apps, websites, etc., we generate a lot of network traffic. Network gear, such as routers and switches, can generate logs about this traffic. While server and application logs increasingly use modern, structured formats, network gear still mostly emits various flavors of Syslog.
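
Because so much network gear still speaks Syslog, a common first parsing step is decoding the `<PRI>` prefix at the start of each message. Per RFC 5424 (and RFC 3164 before it), PRI = facility × 8 + severity. Below is a minimal Python sketch of that decoding; the function name and sample message are illustrative, and it deliberately ignores the rest of the header (timestamp, hostname), whose layout differs between Syslog flavors.

```python
import re

# Severity names indexed by numeric severity (RFC 5424, section 6.2.1).
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]

# Both BSD syslog (RFC 3164) and RFC 5424 messages start with <PRI>.
PRI_RE = re.compile(r"^<(\d{1,3})>")


def decode_pri(message: str) -> tuple:
    """Return (facility number, severity name) decoded from a syslog <PRI> prefix."""
    match = PRI_RE.match(message)
    if match is None:
        raise ValueError("message does not start with a <PRI> field")
    pri = int(match.group(1))
    if pri > 191:  # facilities 0..23, severities 0..7 -> max 23 * 8 + 7
        raise ValueError(f"PRI value out of range: {pri}")
    facility, severity = divmod(pri, 8)
    return facility, SEVERITIES[severity]


print(decode_pri("<189>Jan 12 10:00:00 router1 interface down"))
```

For the sample `<189>` prefix, 189 = 23 × 8 + 5, i.e. facility 23 (local7) and severity 5 (notice).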

Server and Application Logs

Traditional sources of log events are servers and the applications running on them. The kernel emits log messages about things like which drivers it loads or whether the OOM killer was invoked. System services log events too, such as when a user logs in. This information helps you diagnose stability and security issues, as well as system-level performance bottlenecks. Is the kernel sending SYN cookies? It could be an attack (a SYN flood), or the network may simply be overloaded.

As for applications, you may have Nginx logs, a Java web application running in Apache Tomcat, or a PHP application running in the Apache web server. They emit various informational, error, or debug log events.

Some of these logs use standardized formats, like the Common Log Format, while others use custom formats, including structured logging formats like key=value or even JSON.
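
To make this concrete, here is a minimal Python sketch that turns a Common Log Format line into a JSON-ready dict. The regex is an assumption that covers plain CLF only: Combined Log Format lines (with referer and user agent) and requests containing escaped quotes would need a different pattern.

```python
import json
import re

# host ident authuser [date] "request" status bytes
CLF_PATTERN = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<authuser>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-)$'
)


def parse_clf(line: str) -> dict:
    """Parse one Common Log Format line into a dict of named fields."""
    match = CLF_PATTERN.match(line.strip())
    if match is None:
        raise ValueError(f"not a Common Log Format line: {line!r}")
    event = match.groupdict()
    event["status"] = int(event["status"])
    # CLF writes "-" instead of 0 when no response body was sent.
    event["bytes"] = 0 if event["bytes"] == "-" else int(event["bytes"])
    return event


line = '127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326'
print(json.dumps(parse_clf(line)))
```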

If you write your own application, we strongly suggest a structured logging format: it’s much easier to parse further down the pipeline.
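
One way to follow this advice in Python is to attach a JSON formatter to the standard `logging` module, so each event is a single JSON line that needs no regex parsing downstream. This is a minimal sketch: the `fields` attribute used to pass structured data is a convention invented for this example, not a feature of the `logging` module, and many teams use a dedicated structured logging library instead.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        event = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Extra structured fields, passed as extra={"fields": {...}}
        # (a convention chosen for this sketch).
        event.update(getattr(record, "fields", {}))
        return json.dumps(event)


logger = logging.getLogger("checkout")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("order placed", extra={"fields": {"order_id": "A-1001", "amount_eur": 42.5}})
```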

Container Logs

Nowadays, more and more applications are deployed in containers. As such, containers and the applications running inside them are another big source of logs. Unlike traditional apps and servers, and certainly network gear, containers are very “promiscuous”: container orchestration frameworks like Kubernetes move them from host to host, adapting to demand and resource availability. An average container’s lifespan is shorter than that of a firefly or a bee.

On top of that, SSH-ing into a host to poke around, tail, and grep the logs is considered an anti-pattern in the cloud-native world. Hence, Docker monitoring and log management bring new challenges that require new approaches and new Docker log management tools.
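
In practice, containerized apps write to stdout/stderr and the container runtime captures that output. With Docker’s default `json-file` logging driver, each captured line is stored as a JSON object with `log`, `stream`, and `time` keys. The Python sketch below assumes that format and shows how a collector running on the host, rather than a human who SSH-ed in, could turn those lines into events tagged with the container ID. The function name is hypothetical, and discovering containers and their log files is left out.

```python
import json
from typing import Iterable, Iterator


def read_docker_json_log(lines: Iterable[str], container_id: str) -> Iterator[dict]:
    """Yield events from lines written by Docker's json-file logging driver."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank lines
        entry = json.loads(raw)
        yield {
            "container_id": container_id,  # keeps events attributable after the container is gone
            "stream": entry["stream"],     # "stdout" or "stderr"
            "time": entry["time"],
            "message": entry["log"].rstrip("\n"),
        }


sample = '{"log":"GET /health 200\\n","stream":"stdout","time":"2024-05-01T10:00:00.000000000Z"}'
for event in read_docker_json_log([sample], container_id="abc123"):
    print(event)
```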

Mobile Devices and App Logs

Mobile apps and devices are ubiquitous. You may not think of them as sources of logs because you can’t (easily) access system or application logs on an iOS or Android device. Limited disk space and an unreliable network mean you can’t log verbose messages locally, and you can’t assume you’ll ship logs to a central location in real time.

In spite of those challenges, it’s important to know if a mobile app crashes and why, as well as how the app behaves and performs. Typically, you’d buffer up to N messages locally and ship them to a centralized logging service. This is what the Sematext Cloud libraries for Android and iOS do.
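
The buffering approach described above can be sketched as a bounded in-memory queue that drops the oldest messages when full and ships the rest in batches. This is written in Python purely for illustration (it is not the code of the Android or iOS libraries), and the `send` callable stands in for a hypothetical network transport that returns `True` on success.

```python
from collections import deque
from typing import Callable, List


class LogBuffer:
    """Hold at most `capacity` messages in memory and ship them in batches.

    When the buffer is full, the oldest message is dropped, which bounds
    memory use on a constrained device.
    """

    def __init__(self, capacity: int, batch_size: int, send: Callable[[List[str]], bool]):
        self._messages: deque = deque(maxlen=capacity)
        self._batch_size = batch_size
        self._send = send  # hypothetical transport; returns True if the batch was accepted

    def log(self, message: str) -> None:
        self._messages.append(message)  # evicts the oldest entry when full
        if len(self._messages) >= self._batch_size:
            self.flush()

    def flush(self) -> None:
        """Ship buffered messages batch by batch; stop at the first failure."""
        while self._messages:
            batch = list(self._messages)[: self._batch_size]
            if not self._send(batch):
                return  # offline: keep everything and retry on the next flush
            for _ in batch:
                self._messages.popleft()

    def pending(self) -> List[str]:
        return list(self._messages)
```

Dropping the oldest entries is one design choice; a crash reporter might instead prefer to keep the earliest messages, or to always retain errors.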

Sensors, IoT, Industrial IoT

In the consumer space we have sensors in cars, smart thermostats, internet-connected fridges, and other smart-home devices and, on a bigger scale, smart cities.

The Industrial Internet of Things, or IIoT, connects machines and devices in industries such as transportation, power generation, manufacturing, and healthcare.

Typically, we’re interested in metrics generated by these devices. For example, we collect some air pollution levels (PM2.5, PM10) and send them to Sematext Cloud. But logs emitted from these devices are important as well: did this sensor start correctly? Does it need recalibration? How many times did sensors fail in the last 6 months? Based on this information, what is the most reliable manufacturer? These are just some examples of metadata that can be extracted from IoT logs.
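
As an illustration of extracting such metadata, the Python sketch below answers the “most reliable manufacturer” question from sensor logs. It assumes a hypothetical key=value format with `device`, `vendor`, and `event` fields, and normalizes failure counts by the number of distinct devices seen per vendor, since raw counts would favor vendors with smaller fleets.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable


def parse_kv(line: str) -> Dict[str, str]:
    """Parse a space-separated key=value line (values must not contain spaces)."""
    return dict(pair.split("=", 1) for pair in line.split())


def failure_rate_per_vendor(lines: Iterable[str]) -> Dict[str, float]:
    """Startup failures per device, grouped by vendor."""
    devices = defaultdict(set)
    failures = Counter()
    for line in lines:
        event = parse_kv(line)
        vendor = event["vendor"]
        devices[vendor].add(event["device"])
        if event.get("event") == "start_failed":
            failures[vendor] += 1
    return {vendor: failures[vendor] / len(ids) for vendor, ids in devices.items()}


sensor_logs = [
    "device=s-01 vendor=acme event=start_failed",
    "device=s-01 vendor=acme event=started",
    "device=s-02 vendor=acme event=started",
    "device=s-03 vendor=globex event=started",
]
print(failure_rate_per_vendor(sensor_logs))
```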

Why Is Log Management Important: Key Benefits & Use Cases

Log management provides insight into the health and compliance of your systems and applications.

Without it, you’d be stumbling around in the dark hoping to pinpoint sources of performance issues, bugs, unexpected behavior, and other similar issues. You’d be forced to manually inspect multiple log files while trying to troubleshoot production issues. This is painfully slow, error-prone, expensive, and not scalable.

Log management is especially important for cloud-native applications because of their dynamic, distributed, and ephemeral nature. Unlike traditional applications, cloud-native applications often run in containers and emit logs to standard output instead of writing them to log files. This means you don’t have the “default option” of manually grepping logs. Typically, you’d capture the logs and ship them to a centralized log management solution.

In a nutshell, log management enables application and infrastructure operators (developers, DevOps, SysAdmins, etc.) to troubleshoot problems and allows business stakeholders (product managers, marketing, BizOps, etc.) to derive insights from data embedded in log events. Logs are also one of the key sources of data for security analytics – threat detection, intrusion detection, compliance, network security, etc., collectively known as SIEM (Security Information and Event Management).

To fully understand the importance of log management, we’ve gathered some of the main benefits below:

Monitoring and Troubleshooting

The most common and core log management use case is troubleshooting software applications and infrastructure. Log events go hand in hand with application monitoring and server monitoring. Developers, DevOps, SysAdmins, and SecOps use both metrics and logs to get alerted about application and infrastructure performance and health issues, and to find the root cause of those issues. Good log management tools help reduce MTTR (Mean Time To Recovery), which in turn improves user experience. This matters for the bottom line too: long downtimes, or even applications and infrastructure that merely perform poorly, can cause lost revenue.

Logs provide value beyond troubleshooting, though. If you have your logs structured – either from the source, or parsed in the pipeline – you can extract interesting metadata. For example, we often look at slow query logs during Solr or Elasticsearch consulting. Then we can answer lots of questions, like which kinds of queries happen more often, which queries are slow, what’s the breakdown per client, or do we have “noisy” clients? All this helps us optimize the setup, from architecture to queries. If all goes well, we end up with a more stable, faster and more cost-effective system. And we make our own production support job easier!
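
Once slow query logs are parsed into structured events, questions like “what’s the breakdown per client?” or “do we have noisy clients?” become simple aggregations. The following Python sketch assumes hypothetical `client` and `took_ms` fields and flags a client as noisy when it accounts for at least half of the total query time; that threshold is arbitrary.

```python
from collections import defaultdict
from typing import Iterable, Mapping


def breakdown_by_client(events: Iterable[Mapping], noisy_share: float = 0.5) -> dict:
    """Summarize slow-query events per client and flag 'noisy' clients."""
    stats = defaultdict(lambda: {"count": 0, "total_ms": 0})
    for e in events:
        s = stats[e["client"]]
        s["count"] += 1
        s["total_ms"] += e["took_ms"]
    # Avoid division by zero when there are no events (or all took 0 ms).
    grand_total = sum(s["total_ms"] for s in stats.values()) or 1
    for s in stats.values():
        s["noisy"] = s["total_ms"] / grand_total >= noisy_share
    return dict(stats)


slow_queries = [
    {"client": "a", "took_ms": 100},
    {"client": "a", "took_ms": 300},
    {"client": "b", "took_ms": 50},
    {"client": "c", "took_ms": 50},
]
print(breakdown_by_client(slow_queries))
```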

A less “technical” source of logs can be a sales channel. If we log what clients do at every step – along with client metadata – we can optimize. How many of those creating an account end up logging in? Can they successfully use our service, or should we improve our onboarding? Are there specific categories of clients (e.g. from a region of the world) that seem to have trouble? We can derive these insights if we centralize logs.

Log Management vs. APM

Although logging and monitoring overlap, they are not different words for the same process. While both are critical to understanding what went wrong with your system, they each have different purposes. Logging deals with managing the data inside logs and making it readable and available at all times. Monitoring, or application performance monitoring (APM), takes this data and analyzes it to paint a picture of how the system behaves and helps you track down the cause of problems.

There are separate tools you can use for each function or you can opt for an all-in-one solution such as Sematext Cloud that features functionalities required to perform both logging and monitoring efficiently.

Improved Operations

As applications and systems become more complex, so do the size and difficulty of your operations. SecOps, SysAdmins, and DevOps would have a harder time monitoring everything “manually”, which would require more time and financial resources.

With logging, you can identify trends across your whole company’s infrastructure, allowing you to adapt early and come up with solutions that prevent “fires” rather than having to “put them out”.

Better Resource Usage

When it comes to system performance problems, system overload is an ever-present risk. Keep in mind, however, that your software isn’t always at fault: sometimes the problem is the requests hitting your server. Whether there are too many of them or they are too complex, your system can struggle to handle them.

In this case, log management helps you track resource usage. You can then see when your system is close to being overloaded and allocate your resources accordingly.

Performance monitoring can tell you that there are performance issues, for example, that 90th percentile query latency is high. It may also reveal bottlenecks: to stick with the example, you may find that IO is overloaded when queries are slow. That said, you’ll need query logs to get more actionable insight, such as the content of the most expensive queries, how much data those queries touch, and how many of them run in parallel. Unlike metrics, logs give you more metadata to filter and visualize.

User Experience

As with the previous example, one of the biggest headaches people report with applications is long response times to queries or not getting a response at all. Log management allows you to monitor requests at any level (API, database, etc.) and see which are underperforming. This enables you to step in and understand why such issues occurred, thus keeping you in control of your users’ experience.

Understand Site Visitor Behavior

Log management, along with real user monitoring (RUM), can help you track users’ journeys through your site or platform so that you can gain insight into their behavior and improve their experience. Here, log management and RUM complement each other.

RUM tools provide access to the user’s perspective, such as the number of visitors you’ve had on your site, which pages they spent the most time on, if there are changes in the number of visitors and much more.

From logs, you have access to metadata closer to your business logic: how many users ended up paying, what backend requests looked like, etc. By correlating these two sources of data, you can spot opportunities such as when to launch a new product, when to close your site for maintenance, or when to offer discounts.

At Sematext, we offer both RUM and log management under the same roof – Sematext Cloud. You can build dashboards with information from both.

Extra Security

There’s no such thing as too much protection when it comes to IT security. Log analysis is at the heart of any SIEM solution: from network, system and audit logs to application logs. Anomalies here may signal an attack. Logs help security administrators diagnose anomalies in real time by providing a live stream of log events.

So whenever someone attempts to breach your walls, whether it’s an insider or an external threat, you’ll have more insight into what actually happened. You can also get alerted as soon as anomalies appear, so you can react before issues escalate.

Security Audit and Logging Policy

The best way to ensure compliance with security and audit requirements is to create a logging and monitoring policy.

A log management policy sets security standards for audit logs, including system logs, network access logs, authentication logs, and any other data that correlates network or system events with a user’s activity. More specifically, it provides guidelines as to what to log, where to store logs, for how long, how often logs should be reviewed, whether logs should be encrypted or archived for audit purposes, and so on. Such policies make it easier for teams to gather accurate and meaningful insights that help detect and react to suspicious access to, or use of, information systems or data.

Ensure Compliance with Regulations

As cyberattacks become more and more difficult to detect and resolve, it’s critical that your company meets the compliance requirements of security policies, audits, regulations, and forensics.

Some of the most important are HIPAA (Health Insurance Portability and Accountability Act), PCI DSS (Payment Card Industry Data Security Standard), and GDPR (General Data Protection Regulation). Furthermore, a growing number of regulations require that you collect log data, store it, and protect it against threats while keeping it available for audit. If you fall short and a data breach happens, your company could face lost revenue as well as hefty fines under these regulations.

Log management will help alert the right people of any suspicious activity concerning user data.

We wrote an article about logging best practices you should follow to ensure compliance with GDPR, if you want to learn more.

Log Management Lifecycle: How Does It Work?

Log management has 5 key functions that, done right, keep your logging and monitoring running smoothly. Let’s review what those 5 elements are:

Log Collection

As mentioned above, all your systems and applications generate log files at any given moment, which may be stored in various locations on your software stack, operating system, containers, cloud infrastructure, and network devices.

By log collection, we mean either pulling data from a source (e.g. a log file) or accepting data sent from that source (through a UNIX or network socket). Then, you can send it over to the next hop in your pipeline.

The log collector (or shipper) should at least be able to buffer the data in case it can’t reach the destination. Sometimes it’s also a good idea to do some parsing and enriching close to the source. We’ll talk more about these in the next section: log aggregation.
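
Pulling data from a log file usually means remembering how far you’ve already read. The Python sketch below shows the idea: it returns only complete new lines after a given byte offset, plus the offset to persist as a checkpoint so a restarted collector neither re-reads nor skips data. The function is hypothetical and skips real-world concerns such as log rotation, truncation, and multi-line events.

```python
from typing import List, Tuple


def read_new_lines(path: str, offset: int) -> Tuple[List[str], int]:
    """Return complete lines appended to `path` since `offset`, plus the new offset."""
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Consume only up to the last newline; a partial line waits for the next call.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8", errors="replace").splitlines()
    return lines, offset + end
```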

Related log shipping articles:

- Top 5 most popular log shippers

- How to ship Kibana server logs to Elasticsearch

- Using Filebeat to send Elasticsearch logs to Sematext

- Android SDK for log shipping & analytics

- iOS SDK for log shipping & analytics

- Logging libraries vs log shippers

- How to ship Heroku logs to Sematext Logs / Managed ELK Stack

Log Aggregation to Centralized Log Storage

The next key element in log management is log aggregation.

A typical log aggregation pipeline has the ability to:

- collect logs from the needed sources, as we described above.

- buffer logs, in case there are network or throughput issues.

- parse logs to transform them into a format that can be indexed. For example, Elasticsearch consumes JSON, so you need to transform your logs into JSON.

- optionally, enrich them with various metadata. For example, by knowing the IP of a source you can tag the company department of that host or its geo-location.

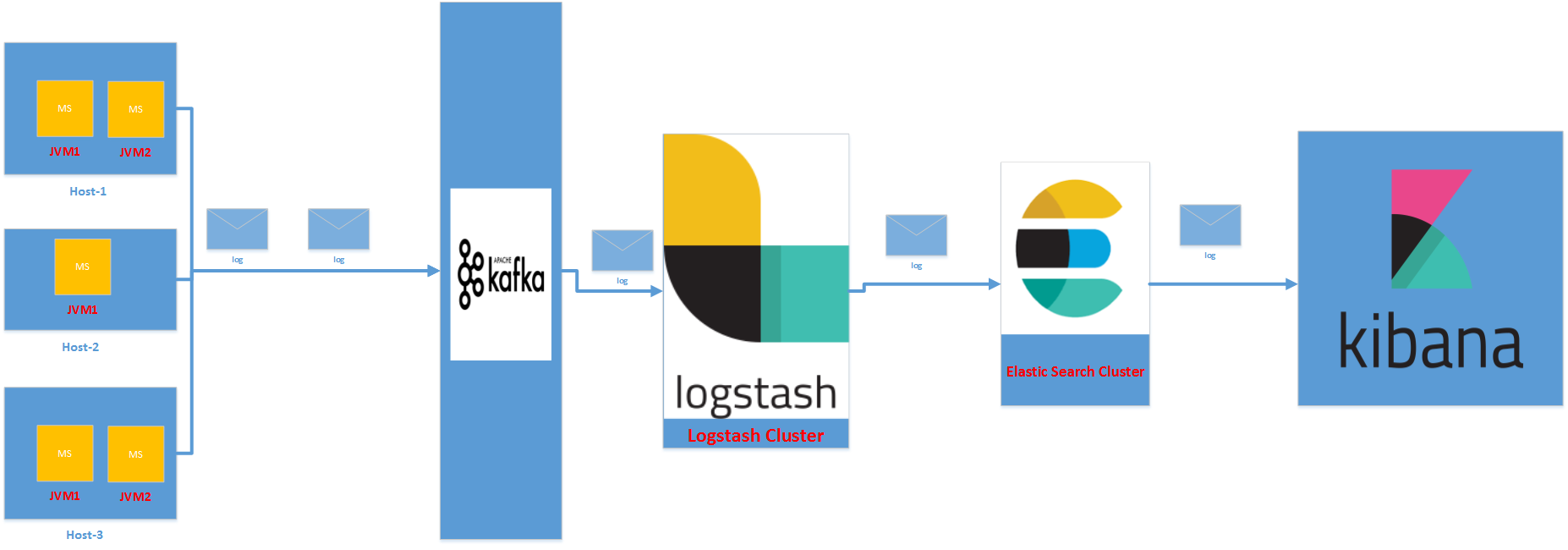
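
The parse and enrich steps from the list above can be sketched in a few lines of Python. The sketch assumes events were already parsed into dicts with a hypothetical `source_ip` field, maps subnets to departments using a made-up table, and serializes the result as JSON, the format a JSON document store such as Elasticsearch expects.

```python
import ipaddress
import json

# Hypothetical mapping from subnets to company departments.
DEPARTMENTS = {
    ipaddress.ip_network("10.1.0.0/16"): "engineering",
    ipaddress.ip_network("10.2.0.0/16"): "finance",
}


def enrich(event: dict) -> dict:
    """Return a copy of the event with a `department` field derived from `source_ip`."""
    ip = ipaddress.ip_address(event["source_ip"])
    department = next((name for net, name in DEPARTMENTS.items() if ip in net), "unknown")
    return {**event, "department": department}


def to_document(event: dict) -> str:
    """Enrich the event and serialize it as a JSON document ready for indexing."""
    return json.dumps(enrich(event))


print(to_document({"source_ip": "10.2.3.4", "message": "invoice exported"}))
```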

You may or may not want to separate these roles. Here are some examples of architectures:

- do everything close to the source. This automatically scales with the number of sources but may become problematic if you have limited resources (e.g. network or mobile devices). For example, you can have a lightweight log shipper, such as rsyslog or Logagent, installed on every host that generates logs.

- have dedicated server(s) that do buffering, parsing, and enriching, preferably in this order, so data can be buffered if processing is too expensive. An example of this design is a centralized Logstash receiving data from a lightweight log shipper, such as Filebeat.

- have dedicated buffering, typically a Kafka cluster. A (lightweight) log shipper will push data to Kafka. On the other end, you have a Consumer (e.g. Logstash or Logagent) in charge of parsing, enriching, and shipping data to the final storage.

That final storage can be deployed in-house, like your own Elasticsearch or Solr. Or it can be a managed service, like Sematext Cloud. A managed service may take care of parts of the pipeline for you. For example, you can send syslog directly from your devices to Sematext Cloud, where it gets buffered, parsed, and indexed. It can also get automatically backed up to your AWS S3 bucket, for archiving/compliance reasons.

We get into more details about this step of the log management process in our dedicated guide about log aggregation.

Log Search and Analysis

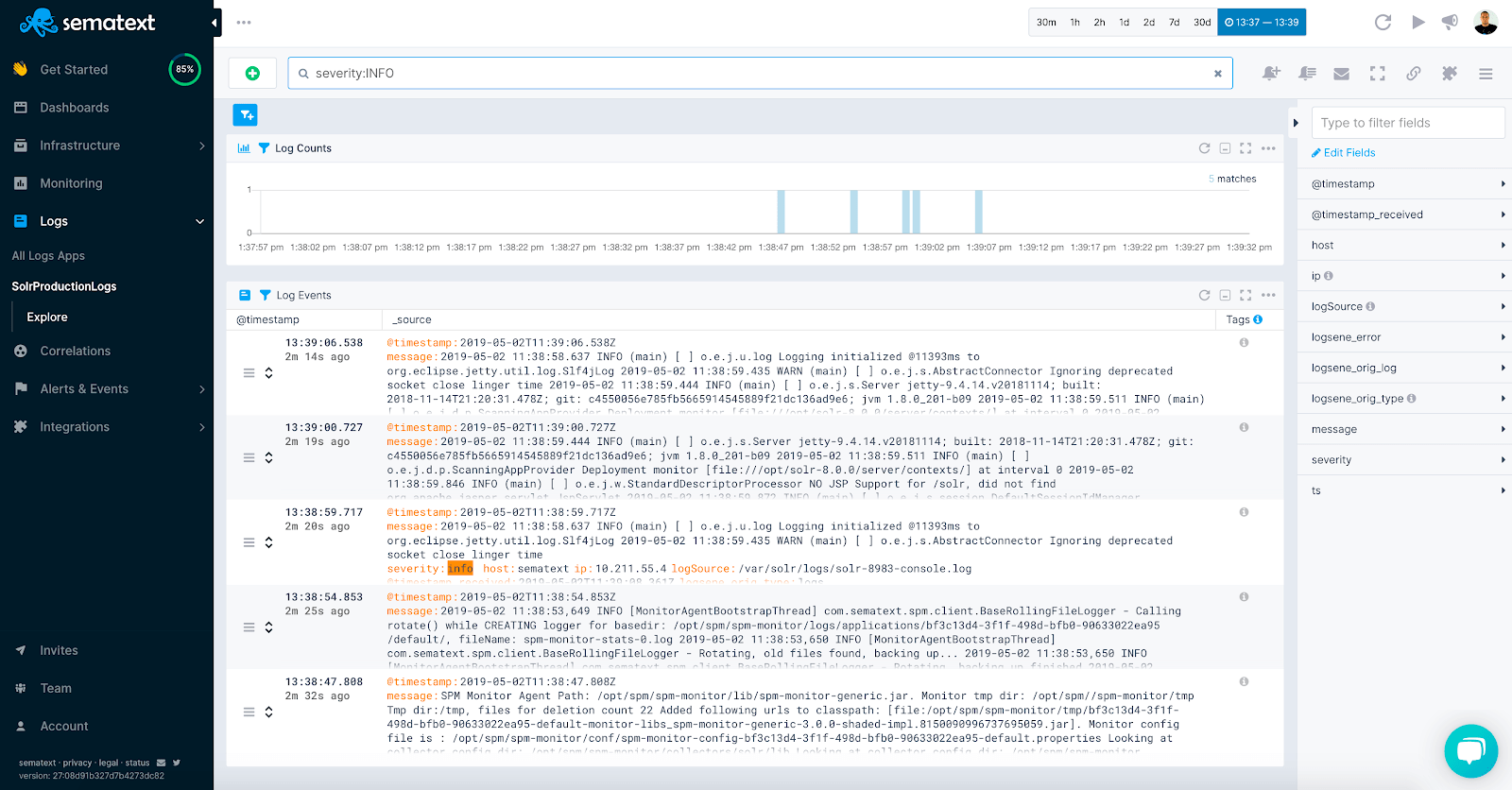

Stored and indexed, your aggregated logs are now searchable, typically through a structured query language such as the Lucene Query Syntax used in Sematext Cloud. This makes it easier for you to dive into root cause analysis.

Log analysis can be more than just search. Even while troubleshooting, it’s often useful to visualize the breakdown of data: does the overall log volume spike at some point? What about traffic volume, or the number of errors per host? If your logs are structured at the time of indexing, you can get all this information and more.
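
Search backends typically compute such breakdowns with aggregations (in Elasticsearch, for example, `terms` and `date_histogram` aggregations). To show what those compute, here is an in-memory Python equivalent over already-structured events; the `host`, `level`, and `timestamp` field names are assumptions.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, Mapping


def errors_per_host(events: Iterable[Mapping]) -> Counter:
    """Count ERROR-level events per host (similar to a terms aggregation)."""
    return Counter(e["host"] for e in events if e["level"] == "ERROR")


def volume_per_minute(events: Iterable[Mapping]) -> Counter:
    """Count events per one-minute bucket (similar to a date histogram)."""
    return Counter(
        datetime.fromisoformat(e["timestamp"]).replace(second=0, microsecond=0)
        for e in events
    )
```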

If you’re interested in learning more about this particular stage of the log management process, head over to our log analysis tutorial.

Log Monitoring and Alerting

Log management helps keep you on your toes by constantly providing data about how your systems and applications are performing. It also keeps you informed about whether your infrastructure is working normally or whether there are activity anomalies or security breaches.

A key part of this process is that it allows you to set up rules and alerts so that the right teams or people are notified in real-time to take measures before users are affected.

For example, a rule could be to alert your security team whenever a certain number of logins fail or the sales staff when too many people abandon their shopping carts.
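
The failed-login rule above is essentially a threshold over a sliding time window. Here is a minimal Python sketch, assuming events arrive in time order and carry hypothetical `event` and `time` fields:

```python
from collections import deque
from datetime import datetime, timedelta


class FailedLoginRule:
    """Fire when at least `threshold` failed logins happen within `window`."""

    def __init__(self, threshold: int, window: timedelta):
        self.threshold = threshold
        self.window = window
        self._failures: deque = deque()  # timestamps of recent failures

    def observe(self, event: dict) -> bool:
        """Feed one event; return True if the alert condition currently holds."""
        if event.get("event") != "login_failed":
            return False
        now: datetime = event["time"]
        self._failures.append(now)
        # Evict failures that slid out of the window.
        while now - self._failures[0] > self.window:
            self._failures.popleft()
        return len(self._failures) >= self.threshold
```

A production alerting system would also deduplicate or throttle notifications; as written, this rule returns `True` for every additional failure while the threshold is exceeded.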

Related articles:

- Recipe: Log alerting and anomaly detection

- Log alerting, anomaly detection, and scheduled reports

- Guide to metrics, monitoring, and alerting

Log Visualization and Reporting

All team members – and other cross-functional team members – should have access to the same information so everyone is on the same page. Reports and visualization make everything that happens behind the scenes accessible for everyone, including people outside the IT department.

When building reports for business stakeholders, you’ll be able to show data trends as time series line charts, group data, and draw tasty pie charts. Graphs, visual representations of trends, and dashboards also have a much higher impact on decision-makers than raw data, for example when they show a huge spike.

Seeing how large volumes of log data evolve over time makes it easier to spot trends or anomalies in behavior. You can then jump straight to the log lines responsible for the spike.

Log Management Challenges in Modern IT Environments

With applications moving from monolithic to microservices architectures, you keep adding more complex layers on top of the underlying infrastructure. This in turn makes it increasingly difficult to gain visibility into your stack. While cloud computing and virtually “unlimited” storage have solved some of the traditional log management challenges, modern applications bring an additional and different set of challenges:

- Distributed systems generate huge volumes of data that are both expensive to store and difficult to query.

- Log collection, shipping, monitoring, and alerting must all be done in real time so that teams can jump into troubleshooting immediately.

- Cloud-based architecture requires efficient logging, alerts, automation, analysis tools, proactive monitoring, and reporting which are not supported by traditional log management.

- Logs come in all kinds of different formats that need to be supported.

Luckily, modern log management systems with their 5 functions – log collection, aggregation, search and analysis, monitoring and alerting, visualization and reporting – can help overcome these logging challenges. However, these functions must be built on top of cloud principles like high availability, scalability, resiliency, and automation. Furthermore, years of experience and trial and error have revealed some tips and tricks you can use for efficient logging even when dealing with such complex environments. Let’s look into that next.

Log Management Policy

A log management policy provides guidelines as to what types of actions need to be logged in order to trace potential fraud, inappropriate use, and so on. More specifically, it states what to log, where to store logs and for how long, how often logs should be reviewed, whether logs should be encrypted or archived for audit purposes, and so on. Implementing an enterprise-wide logging policy ensures proper operation that makes troubleshooting easier, faster, and more efficient.

At the same time, it helps reduce costs. It can be difficult to get the right level of visibility while keeping costs to a minimum: the more logs you collect, the bigger the budget you need for your monitoring. Gathering logs according to a logging policy lets you capture the information your use cases need without collecting everything.

Usually, logging policies are required for certain certification standards, but even if you’re not subject to regulation or certification, it is still important to know which logs to monitor: doing so enables you to investigate fraud, and your users’ privacy is at stake.

Implementing a log management policy is a three-step process: establish the policy, communicate it throughout the company, then put it into practice. Keep in mind, though, that you need different policies for audit requirements and security requirements.
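
Parts of such a policy, such as retention, archiving, and encryption, can be captured as configuration that tooling can enforce. The Python sketch below is one hypothetical way to express retention and disposal rules; the log types and durations are placeholders, not recommendations, since the right values depend on your regulatory requirements.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class RetentionRule:
    log_type: str          # e.g. "auth", "app"
    retention: timedelta   # how long to keep logs before disposal
    archive: bool          # archive (e.g. for audits) instead of deleting outright
    encrypt: bool          # require encryption at rest


# Hypothetical policy values.
POLICY = {
    "auth": RetentionRule("auth", timedelta(days=365), archive=True, encrypt=True),
    "app": RetentionRule("app", timedelta(days=30), archive=False, encrypt=False),
}


def disposition(log_type: str, created: datetime, now: datetime) -> str:
    """Return 'keep', 'archive', or 'delete' for a log chunk according to POLICY."""
    rule = POLICY[log_type]
    if now - created < rule.retention:
        return "keep"
    return "archive" if rule.archive else "delete"
```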

Why Use Log Management Tools

As mentioned before, you can go through all the steps of log management on your own. However, unless you use log management tools, you will need to invest a lot of time and energy.

Log management solutions can handle the entire log management process while giving you the option to personalize each step depending on your needs. Furthermore, they allow you to visualize and enrich logs, making them easily searchable for both troubleshooting and business analytics. Not to mention, centralized logging solutions feature real-time anomaly detection and alerting so that you can pinpoint issues before they even affect the end user.

How to Choose a Log Management Software: Basic Features & Requirements

There are a lot of log management tools for DevOps, ranging from free and open source projects to paid services.

What do these tools have in common? There are some basic requirements you should look for when choosing log management software:

- Easy onboarding and integration with pre-existing systems

- Support SSL/TLS encryption for data in transit and role-based access control

- Compliant with relevant regulatory requirements

- Intuitive user experience

- Fast and intuitive search and filtering features

- Support advanced analytics such as machine learning and anomaly detection to deal with big data challenges

- Scalable and flexible as the volume of data grows, without costs spiraling

- Real-time alerts and notifications

Sematext as a Centralized Logging Solution

Sematext Cloud is a full-stack observability cloud-based platform that bridges the gap between infrastructure monitoring, tracing, log management, synthetic monitoring, and real user monitoring, improving efficiency and granting actionable insights faster. You can easily identify and troubleshoot issues before they affect your users and spot opportunities to drive business growth – we encourage you to explore it in more depth here.

Next Steps

Hopefully, this guide helped you understand what log management is, how it works, and how to choose the right tools. The next step is to pick the right tool and start logging. And since it’s best to set the foundation right from the very beginning, check out some logging best practices you should follow to reap the full benefits of your log data.

Related articles: