PostgreSQL is an open-source relational database management system that’s been utilized in continuous development and production for 30 years now. Nearly all the big tech companies use PostgreSQL, as it is one of the most reliable, battle-tested relational database systems today.

PostgreSQL is a critical point in your infrastructure, as it stores all of your data. This makes visibility mandatory, which in turn means you have to understand how logging works in PostgreSQL. With the help of the logs and metrics that PostgreSQL provides, you can achieve visibility.

In this article, I’ll explain everything you need to know about PostgreSQL logs, from how to enable them to how to format and analyze them easily.

What Are PostgreSQL Logs?

PostgreSQL logs are text files showing you information related to what is currently happening in your database system. This includes who has access to what component, what errors have occurred, what settings have changed, what queries are in process, and what transactions are being executed.

To get a bird’s-eye view of these logs, you can ship them to a centralized place and then have a way to search across all of them. Parsing lets you retrieve important information and metrics, which you can then plot to better visualize as data points.

This article will show you how to tweak your settings in PostgreSQL using both a config file and a command line interface. It is recommended to make all these changes using exclusively the config file, otherwise your changes may be lost when you restart your server.

PostgreSQL Log Location

Out of the box, PostgreSQL will show the logs in stderr, which is not very convenient since they’ll get mixed up with other processes logging to stderr as well. To enable PostgreSQL to create its own log files, you have to enable the logging_collector parameter. When you do, logs will start going to the default location defined by your OS. Below are the default log directories for a few different operating systems:

- Debian-based system:e /var/log/postgresql/postgresql-x.x.main.log. X.x.

- Red Hat-based system: /var/lib/pgsql/data/pg_log

- Windows: C:\Program Files\PostgreSQL\9.3\data\pg_log

To change the location where the log files are stored when the log collector is enabled, you can use the log_directory parameter to specify a custom directory.

Note that logging can sometimes be a problem in PostgreSQL. The logging collector will not allow any log messages to be lost, so at high load, it can block server processes, resulting in issues. You can use syslog instead, as it can drop some messages and will not block the system. To disable the logging collector, you can configure the option to off:

logging_collector off

Depending on your use case, you might want to change the location of your PostgreSQL logs. Common options here include logging to syslog, CSV, Windows Event, and Docker, all discussed further below.

Syslog

You can easily configure PostgreSQL to log to syslog facilities. You need to do this on the syslog daemon via the following configuration:

local0.* /var/log/postgresql

You can use parameters like syslog_facility, syslog_indent, syslog_sequence_number in the PostgreSQL configuration file to format the logs.

CSV Log

If you want to upload logs to an analysis tool or program, you might want to save logs to a CSV file. CSV is well defined, making this process easy. To switch your logs to CSV, you have to add the following line in the PostgreSQL configuration:

csvlog /log/postgresql.csv

You can also create a table on top of these logs and then use SQL to query for specific conditions.

Windows Event Log

For PostgreSQL systems running on Windows, you can send logs to the Windows Event Log with the following configuration:

log_destination = 'stderr, eventlog'

Make sure to register the event source system in the Windows OS so it can retrieve and show you event log messages using Windows Event Viewer. To do this, use the command:

regsvr32 pgsql_library_directory/pgevent.dll

Docker

Nowadays, many tools and databases are run as Docker applications, PostgreSQL included. You can also run the Docker version of PostgreSQL easily on Kubernetes or any other container orchestration platform. However, in such cases, you don’t want to make changes directly in the pods or containers because those changes can be lost when the pods restart. Instead, you have to pass the configs during the start of these containers.

To enable logging, you have to pass the configurations using the ConfigMaps in Kubernetes. Follow this blog to deploy PostgreSQL on Kubernetes and enable/disable various settings.

What Is Important to Log?

Logging a lot of information can lead to a waste of time if you are not able to point out which logs are important and which are not. It’s very important to reduce the noise in logging to achieve faster debugging—this will also save you time and resources to store those logs.

Logs should show you slow queries, log levels, and how to catch critical information with minimal logging. You can do this by using filters, the most common of which are log thresholds, log levels, statement duration, and sampling. Let’s delve a bit into each of these.

Slow Query Thresholds

PostgreSQL can log queries that are taking more time than a defined threshold. Identifying slow log queries helps discover issues with the database and why there are lags in your application.

To enable this, you need to edit the postgresql.conf file. Find the log_min_duration_statement line, and tune it per your needs. For example, the below statement will log all the queries that are taking more than 1 second:

log_min_duration_statement = 1000

After this, save the file and reload PostgreSQL. Your settings will be applied, and you will be able to see logs for slow queries in your PostgreSQL log files.

You can also set this dynamically using the PostgreSQL query interface via the following command:

ALTER DATABASE db SET log_min_duration_statement = ‘1000ms';

Statement Duration

You can easily log the duration of each statement being executed in PostgreSQL. To do this, add the below statement to your configuration to enable logging of each statement:

log_statement all

Another option to accomplish this is by running the following PostgreSQL statement:

ALTER DATABASE db SET log_statement = ‘all';

Note that this will enable the logging of all statements queried, meaning it may not be that useful and simply create a lot of noise.

Instead, you may want to log per the type of query, like DDL or MOD. DDL consists of CREATE, ALTER, and DROP statements, while MOD includes DDL plus other modifying statements.

Sampling

With sampling enabled, you can log sample statements that cross a particular threshold. If your server generates a huge amount of logs due to different events happening, you don’t want to log everything that crosses just any threshold. Instead, you can log a sample of statements that cross a particular threshold. This helps in maintaining lower I/O in logging and less noise in the logs, making it easier to identify which kinds of statements are causing an issue.

You can control these thresholds and sampling via options in the postgresql.conf file like log_min_duration_sample, log_statement_sample_rate, and log_transaction_sample_rate. Check the PostgreSQL’s documentation to see how to use these parameters. You also have the option of making these changes via the command line of PostgreSQL.

Note that this can also be a pitfall, as sampling can result in missing the one statement causing the issue. In such scenarios, you will not be able to find the problem, and debugging will take more time than usual.

PostgreSQL Log Levels

PostgreSQL offers multiple log alert levels based on the severity of the event. You can change the log level of PostgreSQL using the log_min_error_statement parameter in the PostgreSQL configuration file, selecting any of the following levels:

- DEBUG1, DEBUG2, DEBUG3… DEBUG5: Gives developers more detailed information

- INFO: Retrieves specific data requested by a user like verbose output

- NOTICE: Offers useful information to users like identifier truncation

- WARNING: Delivers warnings of likely problems

- ERROR: Registers errors, including those that cause any command to abort

- LOG: Logs data like checkpoint activity, which can be useful for the administrator

- FATAL: Occurs for errors that caused the current running session to abort

- PANIC: Occurs for errors that cause all database sessions to abort

If you are sending logs to Windows eventlog or syslog, the log-severity level will be changed as follows:

- DEBUG1… DEBUG5 will be translated to DEBUG in syslog and INFORMATION in eventlog.

- INFO will be INFO in syslog and INFORMATION in eventlog.

- NOTICE will be NOTICE in syslog and INFORMATION in eventlog.

- WARNING will be NOTICE in syslog and WARNING in eventlog.

- ERROR will be WARNING in syslog and ERROR in eventlog.

- LOG will be INFO in syslog and INFORMATION in eventlog.

- FATAL will be ERR is syslog and ERROR in eventlog.

- PANIC will be CRIT in syslog and ERROR in eventlog.

Apart from the log levels, it’s really important to understand what type of logs are generated by PostgreSQL. This helps you know what kind of logs you should look at if you see a certain kind of problem.

Log Types

There are multiple types of PostgreSQL logs you need to consider while debugging issues. You can divide them into two types: admin-specific logs and application-user-specific logs.

Admin-specific logs help manage the PostgreSQL server. If the server is not working properly, these can provide the reason for this and aid in troubleshooting.

There are two types of admin-specific logs:

- Startup logs: These show all the important events and any issues (for example, due to any misconfigurations) during the startup process of your PostgreSQL server.

- Server logs: These can help you identify anything going wrong with the PostgreSQL server at runtime from an admin perspective. They are located in the default location of your installation or as prescribed by you in the PostgreSQL configuration file.

When it comes to application-user-specific logs, there are several important PostgreSQL logs to keep an eye on:

- Query logs show you all the queries that have occurred in the server; you can see the logged queries if you have

log_statementenabled. - Transaction logs are the record of all events performed on the database; they follow the WAL (write ahead log) standard, which is not meant to be human readable. WAL is a way to keep a record of all actions performed on the database and can be used to recover from a catastrophic failure. The

pg_receivexlogplugin can show the transaction logs streamed by your PostgreSQL server. - Connection logs are useful to find any unwanted connections to the server. You can enable

log_connectionsin thepostgresql.conffile to log each attempt to connect to your server;log_disconnectionslets you see all the clients that disconnected from the server. - Error logs help you identify if any of your queries create unwanted issues in the server;

log_min_error_statementcontrols the error statement logging severity level. - Audit logs and access logs are critical from the admin’s point of view. The former show changes made to the database, while the latter identify who made what queries; these can be enabled via the

log_statementconfiguration or a PostgreSQL plugin like pgAudit.

You’ll find most of these log types in the default log locations or the location that you define in the postgresql.conf file. There are also multiple open-source projects I like using together with PostgreSQL for better log file analysis like pgBadger.

Just keeping a log won’t cover all cases. You also need to look at how you will archive or rotate your logs. PostgreSQL supports log rotation, as discussed in the next section.

PostgreSQL Log Rotation

PostgreSQL can rotate logs with the help of some basic configuration parameters it offers. With options like log_rotation_age, log_rotation_size, and log_truncate_on_rotation, you can easily configure at what point you want to rotate your logs. For example:

log_rotation_age 60 #default unit is minutes, this will rotate logs every log_rotation_age 300 #rotate the logs after the time mentioned.

You can also use the CLI to set this configuration.

As already mentioned, understanding your logs is a necessary step in identifying issues, and to best do this, you need to understand log formatting. In PostgreSQL, you can easily define the log format per your given needs.

How to Format Logs

PostgreSQL has the option to log in CSV format and generate a CSV file, which you can then use to put the logs in a table and use SQL on top of it.

Apart from this, the log_line_prefix parameter lets you format the beginning of each log line in the postgresql.conf file or via the command line. Configurable parameters include application name, username, database name, remote host, backend type, process ID, etc. The whole list of options is available in PostgreSQL’s documentation. For example:

log_line_prefix = '%m [%p] %u@%d/%a '

The above log_line_prefix means logs will begin with the time in milliseconds, then process ID, username, database name, and application name.

Log formatting, thresholds, sampling, log levels, and log types will all help you in debugging issues. But you ideally need a tool that allows you to aggregate and analyze all of these logs and view the output via one dashboard rather than having to go to each server. One such tool is Sematext. Let’s look at how you can gain from PostgreSQL logging with Sematext.

PostgreSQL Logging with Sematext

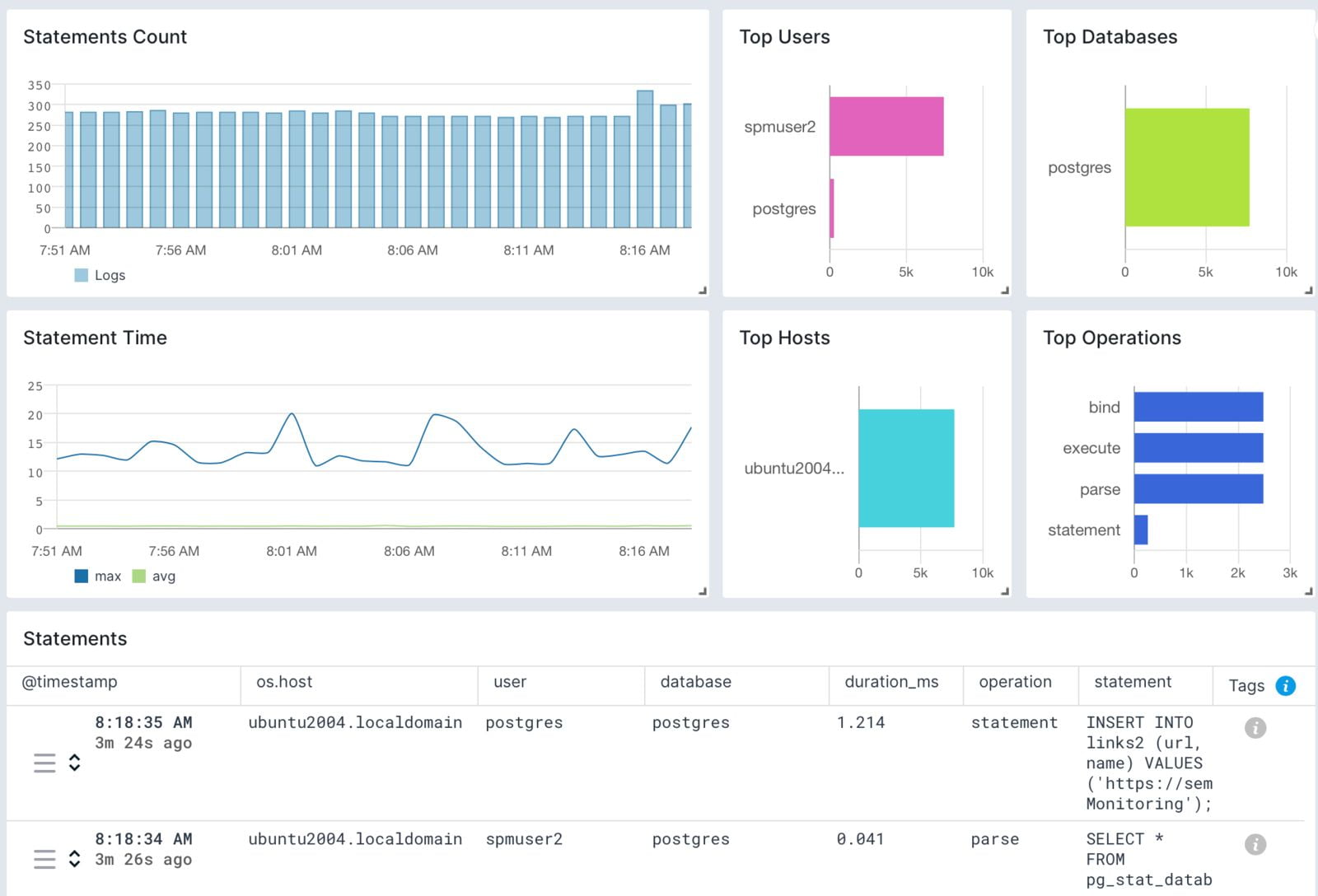

PostgreSQL logging with Sematext

Sematext Logs is a log management and monitoring solution that lets you aggregate logs from various data sources across your infrastructure in one place for viewing and analysis.

Sematext features service auto-discovery so you just have to install the Sematext agent on your servers, perform some basic configuration, and your PostgreSQL logs will start flowing to Sematext and be presented via an intuitive, out-of-the-box dashboard. You can even easily create a custom dashboard, set up alerts, and send the alerts to different notification channels like Gmail, Slack, or PagerDuty.

Sematext also offers features like anomaly detection, which helps you identify issues in advance and then take action to prevent them from happening. For better insight, you can correlate PostgreSQL logs with PostgreSQL metrics to detect bottlenecks faster. That way, you get a bird’s-eye view of your PostgreSQL machines for easier troubleshooting and debugging.

Sematext Logs is part of Sematext Cloud, a full-stack logging and monitoring solution that allows you to gain visibility into and integrate your entire IT environment. Besides databases, it supports integration with a wide variety of tools, including HAProxy, Apache Tomcat, JVM, and Kubernetes. Plus, you get support for Kubernetes deployments, so it will be easier for you to monitor your installation in a Kubernetes environment.

Conclusion

Keeping an eye on PostgreSQL logs is a critical part of database troubleshooting. By understanding how queries made and statements executed, as well as traffic, connections, errors, and other changes or events on your server, you can easily drill down to problematic processes and discover the root cause of your performance issues

You can track logs in various ways, like using less or tail on the log files, but this will become tough to manage when logs are spread across multiple files and machines. You need logs in one place, and a solution like Sematext Logs can help you achieve this.

Try Sematext out for free, and see how it can help your organization with log management and analysis.

Author Bio

Gaurav Yadav

Gaurav has been involved with systems and infrastructure for almost 6 years now. He has expertise in designing underlying infrastructure and observability for large-scale software. He has worked on Docker, Kubernetes, Prometheus, Mesos, Marathon, Redis, Chef, and many more infrastructure tools. He is currently working on Kubernetes operators for running and monitoring stateful services on Kubernetes. He also likes to write about and guide people in DevOps and SRE space through his initiatives Learnsteps and Letusdevops.