OK, so you want to hook up rsyslog with Logstash. If you don’t remember why you want that, let me give you a few hints:

- Logstash can do lots of things and is easy to set up, but it tends to be too heavy to put on every server

- you have Redis already installed so you can use it as a centralized queue. If you don’t have it yet, it’s worth a try because it’s very light for this kind of workload.

- you have rsyslog on pretty much all your Linux boxes. It’s light and surprisingly capable, so why not make it push to Redis in order to hook it up with Logstash?

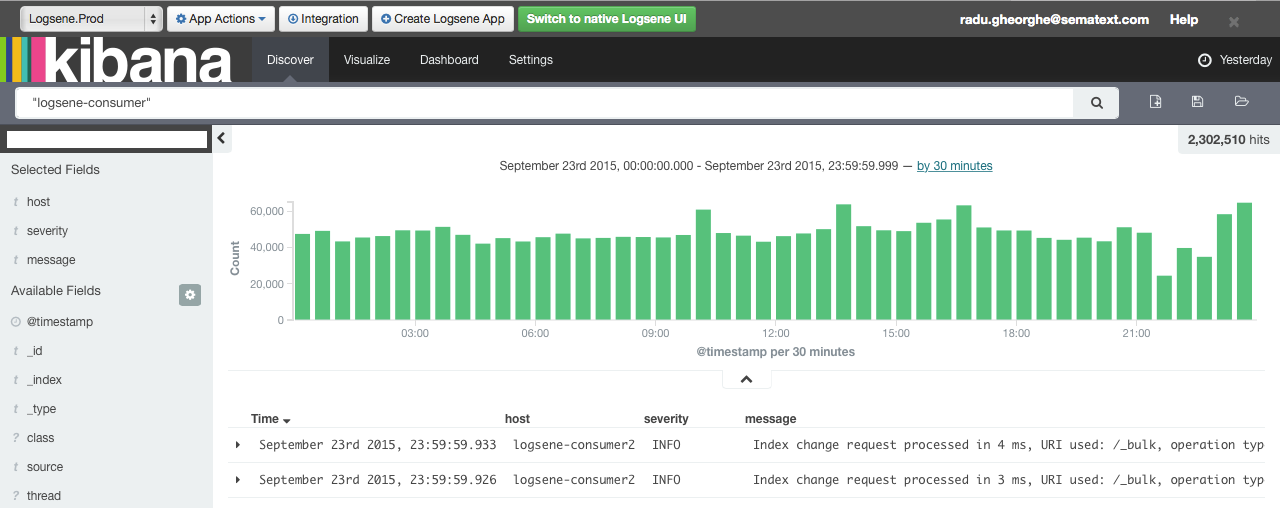

In this post, you’ll see how to install and configure the needed components so you can send your local syslog (or files tailed with rsyslog) to Redis, where it’s buffered until Logstash ships it to Elasticsearch or to a logging SaaS like Logsene (which exposes the Elasticsearch API for both indexing and searching). From there, you can search and analyze your logs with Kibana.

Getting the ingredients

First of all, you’ll probably need to update rsyslog. Most distros come with ancient versions and don’t have the plugins you need. There are packages for most popular distros but you can also compile from sources. At the time of this writing, there’s no package for omhiredis (the Redis output module in rsyslog), so compiling from sources is the only option:

# add other options if you want, like --enable-imfile for file tailing
# (try ./configure --help for all the options)
./configure --enable-omhiredis

At this point the configure script may cry for missing dependencies. Look for the $NAME-devel packages of your distro, and make sure you have the rsyslog repositories in place anyway, because they provide needed packages like json-c. Once configure runs successfully, you’d compile and install it:

make
make install

Now you can start your new rsyslog (stop the old one first) via /usr/local/sbin/rsyslogd. Run it with -v to check the version (you’d need at least 8.13 for this tutorial), add -n to start it in foreground and -dn to start in debug mode.
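If you want to script the version check, here’s a minimal Python sketch. It assumes the first line of `rsyslogd -v` looks roughly like `rsyslogd 8.13.0, compiled with:` (the exact banner may differ between builds), and the function names are made up for this example. Comparing the numbers as tuples avoids the classic trap where "8.9" sorts after "8.13" as a string.

```python
import re
import subprocess


def parse_version(banner: str) -> tuple:
    """Extract (major, minor) from the text printed by `rsyslogd -v`."""
    match = re.search(r"rsyslogd\s+(\d+)\.(\d+)", banner)
    if match is None:
        raise ValueError("could not find a version number in: " + banner[:60])
    return int(match.group(1)), int(match.group(2))


def is_new_enough(banner: str, minimum=(8, 13)) -> bool:
    """True if the version in the banner is at least `minimum`."""
    return parse_version(banner) >= minimum


def installed_banner(binary="/usr/local/sbin/rsyslogd") -> str:
    """Run `rsyslogd -v` and return its output (the path is the one used above)."""
    result = subprocess.run([binary, "-v"], capture_output=True, text=True, check=True)
    return result.stdout
```

Calling `is_new_enough(installed_banner())` should tell you whether the freshly built binary is recent enough for this tutorial.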

With rsyslog out of the way, you’d install Redis. Again, I installed it from sources following the instructions here.

Feel free to poke around redis.conf (I’ve increased the buffer limits for pub/sub clients, which we’ll use here) and then start it via

src/redis-server redis.conf

Finally, you’ll need Logstash. At the time of this writing, we have a beta of 2.0, which comes with lots of improvements. No compiling needed here, we’d just poke around with logstash.conf and start Logstash via

bin/logstash -f logstash.conf

Configuring rsyslog

With rsyslog, you’d need to load the needed modules first:

module(load="imuxsock")   # will listen to your local syslog
module(load="imfile")     # if you want to tail files
module(load="omhiredis")  # lets you send to Redis

If you want to tail files, you’d have to add definitions for each group of files like this:

input(type="imfile"
  File="/opt/logs/example*.log"
  Tag="examplelogs"
)

Then you’d need a template that will build JSON documents out of your logs. You would fetch these JSONs on the other side with Logstash. Here’s one that works well for plain syslog:

template(name="json_lines" type="list" option.json="on") {
  constant(value="{")
  constant(value="\"timestamp\":\"")
  property(name="timereported" dateFormat="rfc3339")
  constant(value="\",\"message\":\"")
  property(name="msg")
  constant(value="\",\"host\":\"")
  property(name="hostname")
  constant(value="\",\"severity\":\"")
  property(name="syslogseverity-text")
  constant(value="\",\"facility\":\"")
  property(name="syslogfacility-text")
  constant(value="\",\"syslog-tag\":\"")
  property(name="syslogtag")
  constant(value="\"}\n")
}
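To sanity-check what the template produces, you can parse a line the same way any JSON consumer would. Here’s a small Python sketch; the sample line is hypothetical (made-up host, tag and timestamp), and `check_line` is just a helper name for this example. It shows the six fields defined above and why `option.json="on"` matters: quotes inside the message have to arrive escaped, or the line won’t parse.

```python
import json

# The six field names built by the json_lines template.
EXPECTED_KEYS = {"timestamp", "message", "host", "severity", "facility", "syslog-tag"}

# Hypothetical example of one emitted line, including the trailing newline
# and a message that contains (escaped) double quotes.
SAMPLE_LINE = (
    '{"timestamp":"2015-10-01T12:00:00.123456+00:00",'
    '"message":" user said \\"hi\\"",'
    '"host":"web01","severity":"info","facility":"user",'
    '"syslog-tag":"myapp:"}\n'
)


def check_line(line: str) -> dict:
    """Parse one line and verify it carries exactly the template's fields."""
    event = json.loads(line)
    keys = set(event)
    missing = EXPECTED_KEYS - keys
    extra = keys - EXPECTED_KEYS
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
    return event


event = check_line(SAMPLE_LINE)
print(event["host"], event["severity"], event["message"])
```

If a real line from your setup fails here, the template (or a lost backslash in it) is the first place to look.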

By default, rsyslog has a memory queue of 10K messages and has a single thread that works with batches of up to 16 messages (you can find all queue parameters here). You may want to change:

- the batch size, which also controls the maximum number of messages to be sent to Redis at once

- the number of threads, which would parallelize sending to Redis as well

- the size of the queue and its nature: in-memory (default), disk or disk-assisted

In an rsyslog->Redis->Logstash setup, I assume you want to keep rsyslog light, so these numbers would be small, like:

main_queue(
  queue.workerthreads="1"       # threads to work on the queue
  queue.dequeueBatchSize="100"  # max number of messages to process at once
  queue.size="10000"            # max queue size
)

And even with this, I’m getting at least 200K events per second pushed to Redis! Speaking of pushing to Redis, this is the last part:

action(
  type="omhiredis"
  mode="publish"          # to use the pub/sub mode
  key="rsyslog_logstash"  # we'd need the same key in Logstash's config
  template="json_lines"   # use the JSON template we defined earlier
)

Assuming Redis is started, rsyslog will keep pushing to it.
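Before wiring up Logstash, it can help to confirm that messages really show up on the channel. The quickest way is `redis-cli subscribe rsyslog_logstash`, but if you’d rather see the parsed JSON, here’s a rough Python sketch that speaks just enough of the Redis protocol (RESP) over a plain socket to subscribe and print events. It assumes Redis listens on 127.0.0.1:6379 (the Redis default) and the function names are invented for this example; it’s a debugging aid, not a replacement for a real client library.

```python
import json
import socket


def encode_command(*args: str) -> bytes:
    """Encode a Redis command as a RESP array of bulk strings."""
    out = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode("utf-8")
        out.append(b"$" + str(len(data)).encode() + b"\r\n" + data + b"\r\n")
    return b"".join(out)


def read_reply(stream):
    """Read one RESP reply from a binary file-like object."""
    line = stream.readline()
    if not line:
        raise ConnectionError("Redis closed the connection")
    kind, rest = line[:1], line[1:].rstrip(b"\r\n")
    if kind == b"+":  # simple string
        return rest.decode()
    if kind == b"-":  # error reply
        raise RuntimeError(rest.decode())
    if kind == b":":  # integer
        return int(rest)
    if kind == b"$":  # bulk string: length, payload, CRLF
        length = int(rest)
        if length == -1:
            return None
        data = stream.read(length + 2)
        return data[:-2].decode("utf-8", errors="replace")
    if kind == b"*":  # array of nested replies
        return [read_reply(stream) for _ in range(int(rest))]
    raise ValueError(f"unexpected RESP type: {line!r}")


def watch_channel(channel="rsyslog_logstash", host="127.0.0.1", port=6379, limit=5):
    """Subscribe to the channel and print the first `limit` events as dicts."""
    with socket.create_connection((host, port)) as sock:
        sock.sendall(encode_command("SUBSCRIBE", channel))
        stream = sock.makefile("rb")
        seen = 0
        while seen < limit:
            reply = read_reply(stream)
            # Published messages arrive as ["message", channel, payload];
            # the first reply is the subscribe confirmation, which we skip.
            if isinstance(reply, list) and reply and reply[0] == "message":
                print(json.loads(reply[2]))
                seen += 1
```

Call `watch_channel()` in one terminal, then generate some traffic (for example with `logger test`) in another. Keep in mind that Redis pub/sub doesn’t store anything: messages published while nobody is subscribed are simply dropped, so start the subscriber (or Logstash) first.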

Configuring Logstash

This is the part where we pick up the JSON logs (as defined in the earlier template) and forward them to the preferred destinations. So we have the input, which will point to the same Redis key we used in rsyslog:

input {
  redis {
    data_type => "channel"     # use pub/sub, like we do with rsyslog
    key => "rsyslog_logstash"  # use the same key as in rsyslog
    batch_count => 100         # how many messages to fetch at once
  }
}

You may use filters for parsing your data (e.g. adding Geo information based on IP), and then you’d have an output for your preferred destination. Here’s how you can push to Logsene or Elasticsearch using the Elasticsearch output:

output {
  elasticsearch {
    hosts => "logsene-receiver.sematext.com:443"  # it used to be "host" and "port" pre-2.0
    ssl => "true"
    index => "your Logsene app token goes here"
    manage_template => false
    #protocol => "http"  # removed in 2.0
    #port => "443"       # removed in 2.0
  }
}

And that’s it! Now you can use Kibana (or, in the case of Logsene, either Kibana or Logsene’s own UI) to search your logs!