Last updated on Jan 10, 2018

One of the things you learn when attending Sematext Solr training is how to scale Solr. We discuss various topics regarding leader shards and their replicas – things like when to go for more leaders, when to go for more replicas and when to go for both. We discuss what you can do with them, how to control their creation and how to work with them. In this blog entry, I would like to focus on one of those aspects – handling replica creation in SolrCloud. Note that while this is not limited to Solr on Docker deployments, if you are considering running Solr in Docker containers you will want to pay attention to this.

The Basics

When creating a collection in SolrCloud we can adjust the creation command. Some of the parameters are mandatory, while others have defaults that can be overridden. The two main parameters we are interested in are the number of shards and the replication factor. The former tells Solr how to divide the collection – how many distinct pieces (shards) our collection will be split into. For example, if we say that we want to have four shards, Solr will divide the collection into four pieces, with each piece having about 25% of the documents. The replication factor, on the other hand, dictates the number of physical copies that each shard will have. So, when the replication factor is set to 1, only leader shards will be created. If the replication factor is set to 2 each leader will have one replica, if it is set to 3 each leader will have two replicas, and so on.
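To make the arithmetic concrete, here is a minimal Python sketch. The function name is made up for illustration, and the per-shard share assumes documents are hashed evenly across shards:

```python
def collection_layout(num_shards: int, replication_factor: int) -> dict:
    """Summarize the physical layout implied by numShards and replicationFactor."""
    if num_shards < 1 or replication_factor < 1:
        raise ValueError("numShards and replicationFactor must be >= 1")
    return {
        # Every shard has exactly one leader.
        "leaders": num_shards,
        # The leader counts as one copy, so each leader gets RF - 1 replicas.
        "replicas_per_leader": replication_factor - 1,
        # Total number of physical cores across the cluster.
        "total_cores": num_shards * replication_factor,
        # Approximate share of documents per shard (assumes even hashing).
        "docs_share_per_shard": 1.0 / num_shards,
    }


layout = collection_layout(num_shards=4, replication_factor=2)
print(layout)
```

With four shards and a replication factor of 2, this reports four leaders, one replica per leader and eight cores in total.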

By default, Solr will put at most one shard of a collection on a given node. If we want to have more shards than the number of nodes in the SolrCloud cluster, we need to adjust that behavior, which we can do with the maxShardsPerNode parameter of the Collections API. Of course, Solr will try to spread the shards evenly around the cluster, but we can also adjust that behavior by telling Solr on which nodes shards should be created.

Let’s look at this next. For the purpose of showing you how all of this works, I’ll use Docker and Docker Compose. I’ll launch four containers with SolrCloud and one container with ZooKeeper. I’ll use the following docker-compose.yml file:

    version: "2"
    services:
      solr1:
        image: solr:6.2.1
        ports:
          - "8983:8983"
        links:
          - zookeeper
        command: bash -c '/opt/solr/bin/solr start -f -z zookeeper:2181'
      solr2:
        image: solr:6.2.1
        links:
          - zookeeper
          - solr1
        command: bash -c '/opt/solr/bin/solr start -f -z zookeeper:2181'
      solr3:
        image: solr:6.2.1
        links:
          - zookeeper
          - solr1
          - solr2
        command: bash -c '/opt/solr/bin/solr start -f -z zookeeper:2181'
      solr4:
        image: solr:6.2.1
        links:
          - zookeeper
          - solr1
          - solr2
          - solr3
        command: bash -c '/opt/solr/bin/solr start -f -z zookeeper:2181'
      zookeeper:
        image: jplock/zookeeper:3.4.8
        ports:
          - "2181:2181"
          - "2888:2888"
          - "3888:3888"

Start all containers by running:

$ docker-compose up

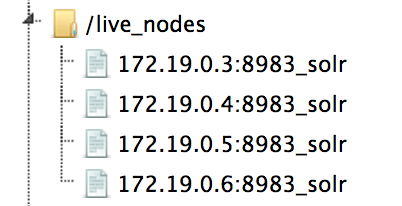

The command needs to be run in the directory where the docker-compose.yml file is located. After it finishes, we should see the following nodes in our SolrCloud cluster:

In order to create a collection we will need to upload a configuration – I’ll use one of the configurations provided by default with Solr and upload it to ZooKeeper with this command:

$ docker exec -it --user solr 56a02fb8d997 bin/solr zk -upconfig -n example -z zookeeper:2181 -d server/solr/configsets/data_driven_schema_configs/conf

Here 56a02fb8d997 is the identifier of the first Solr container; yours will differ and can be found with docker ps. Btw, if you are not familiar with Solr and Docker together, we encourage you to look at the slides of our Lucene Revolution 2016 talk “How to run Solr on Docker. And Why”. The video should also be available soon.

We can finally move to collection creation. Let’s try to create a collection built of 4 primary shards. That is easy, we just run:

$ curl 'localhost:8983/solr/admin/collections?action=CREATE&name=primariesonly&numShards=4&replicationFactor=1&collection.configName=example'
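If you prefer to script such calls instead of typing them, a small helper can assemble the same URL. This is only a sketch: the helper name and the base URL default are my own choices, and it builds the request without sending it.

```python
from urllib.parse import urlencode


def collections_api_url(action: str, base: str = "http://localhost:8983/solr", **params) -> str:
    """Build a Collections API URL; parameters keep the order they were passed in."""
    query = {"action": action, **params}
    return f"{base}/admin/collections?{urlencode(query)}"


url = collections_api_url(
    "CREATE",
    name="primariesonly",
    numShards=4,
    replicationFactor=1,
    # The dot in the parameter name means it has to be passed via a dict.
    **{"collection.configName": "example"},
)
print(url)
```

The printed URL can be passed to curl or to any HTTP client.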

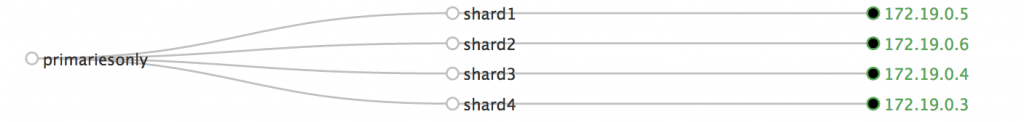

Once the command is executed, the collection view should look as follows:

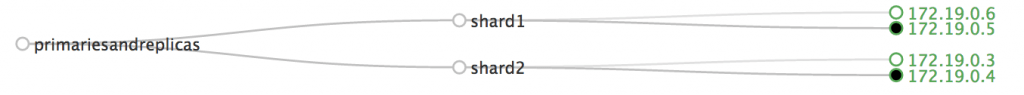

We can of course create a collection divided into fewer leader shards, but with replicas:

$ curl 'localhost:8983/solr/admin/collections?action=CREATE&name=primariesandreplicas&numShards=2&replicationFactor=2&collection.configName=example'

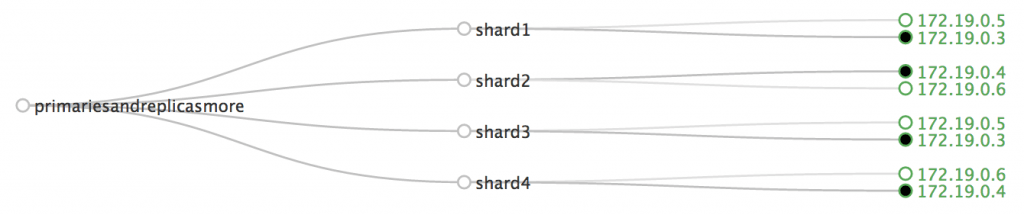

And have more shards than we have nodes:

$ curl 'localhost:8983/solr/admin/collections?action=CREATE&name=primariesandreplicasmore&numShards=4&replicationFactor=2&maxShardsPerNode=2&collection.configName=example'
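Why maxShardsPerNode=2 is needed here comes down to simple arithmetic: four shards with a replication factor of 2 need eight cores, and four nodes with one core each can hold only four. A rough sketch of that check (my own helper, not Solr code) looks like this:

```python
def fits_in_cluster(num_shards: int, replication_factor: int,
                    live_nodes: int, max_shards_per_node: int = 1) -> bool:
    """Return True if the cluster has room for every core of the collection."""
    needed = num_shards * replication_factor      # cores the collection requires
    capacity = live_nodes * max_shards_per_node   # cores the nodes may host
    return needed <= capacity


# Four nodes, as in the docker-compose setup above.
print(fits_in_cluster(4, 2, live_nodes=4))                         # default limit of 1
print(fits_in_cluster(4, 2, live_nodes=4, max_shards_per_node=2))  # limit raised to 2
```

The first call reports that the collection does not fit, the second that it does.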

The key point here is this: once you have created a collection you can add more replicas, but the number of leader shards will stay the same. This holds as long as we are using the compositeId router and we don’t split shards. So how can we add more replicas? Let’s find out.

Adding Replicas Manually

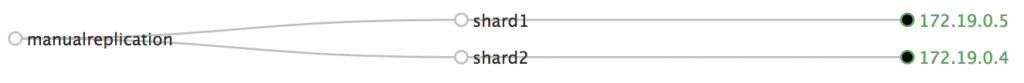

The first idea is to add replicas manually by specifying which collection and shard we are interested in and where the replica should be created. Let’s look at a collection created with the following command:

$ curl 'localhost:8983/solr/admin/collections?action=CREATE&name=manualreplication&numShards=2&replicationFactor=1&collection.configName=example'

To add a replica we need to say on which node the new replica should be placed and which shard it should replicate. Let’s try creating a replica for shard1 and place it on 172.19.0.3. To do that we run the following:

$ curl 'localhost:8983/solr/admin/collections?action=ADDREPLICA&collection=manualreplication&shard=shard1&node=172.19.0.3:8983_solr'

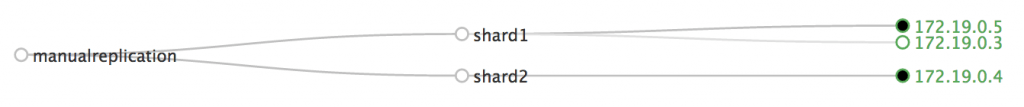

After executing the above command and waiting for the recovery process to finish, we would see the following view of the collection:

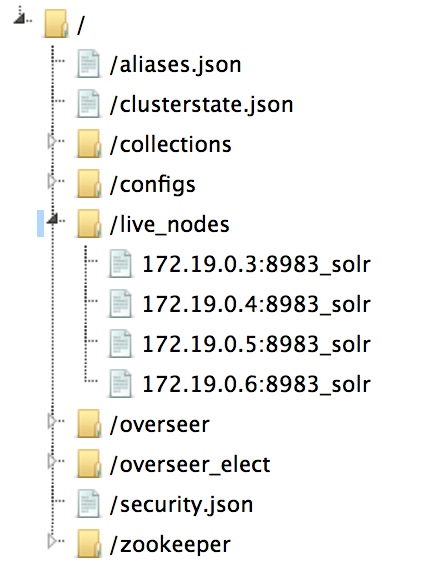
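The ADDREPLICA call can be scripted in the same way. In the sketch below the function name and defaults are illustrative, and the node argument is optional; leaving it out lets Solr choose the node.

```python
from typing import Optional
from urllib.parse import urlencode


def add_replica_url(collection: str, shard: str, node: Optional[str] = None,
                    base: str = "http://localhost:8983/solr") -> str:
    """Build an ADDREPLICA URL; omit `node` to leave placement to Solr."""
    params = {"action": "ADDREPLICA", "collection": collection, "shard": shard}
    if node is not None:
        params["node"] = node
    # Keep ':' unescaped so the node name stays readable in the URL.
    return f"{base}/admin/collections?{urlencode(params, safe=':')}"


print(add_replica_url("manualreplication", "shard1", node="172.19.0.3:8983_solr"))
print(add_replica_url("manualreplication", "shard2"))
```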

Of course, you may ask where to get the node name. You can retrieve it from the /live_nodes node in ZooKeeper, where each child is named after one live Solr node.
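If you would rather not open ZooKeeper, the CLUSTERSTATUS action of the Collections API also reports the live nodes in its JSON response. The sketch below assumes that response shape (a cluster object with a live_nodes list); the sample data is hand-written for illustration and the fetch function is defined but not called, since it needs a running cluster.

```python
import json
from urllib.request import urlopen


def fetch_cluster_status(base: str = "http://localhost:8983/solr") -> dict:
    """Call CLUSTERSTATUS on a running cluster (requires network access to Solr)."""
    with urlopen(f"{base}/admin/collections?action=CLUSTERSTATUS&wt=json") as resp:
        return json.load(resp)


def live_nodes(status: dict) -> list:
    """Extract the sorted list of live node names from a CLUSTERSTATUS response."""
    return sorted(status.get("cluster", {}).get("live_nodes", []))


# Hand-written sample shaped like a CLUSTERSTATUS response; addresses are illustrative.
sample = {"cluster": {"live_nodes": ["172.19.0.4:8983_solr", "172.19.0.3:8983_solr"]}}
print(live_nodes(sample))
```

Against a real cluster you would call live_nodes(fetch_cluster_status()) instead of using the sample.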

Please remember that specifying the node name is not mandatory. We may actually let Solr choose the node for us. We still have to manually add replicas when we add machines though. Can this be changed? Automated?

Automatically Adding Replicas

It is possible to automatically create new replicas when SolrCloud is working on top of a shared file system. We do that by adding autoAddReplicas=true to the collection creation command, just like this:

$ curl 'localhost:8983/solr/admin/collections?action=CREATE&name=autoadd&numShards=2&replicationFactor=1&autoAddReplicas=true'

On a shared file system, Solr will automatically create replicas. Unfortunately, when Solr is not running on a shared file system, it cannot automatically spread a collection onto new nodes as they are added to the cluster.

Controlling Shard Placement

We’ve mentioned earlier that we can assign shards when creating the collection. We can do it in a semi-manual fashion or using rules. The semi-manual way is very simple. For example, let’s create a collection that is placed on nodes 172.19.0.3 and 172.19.0.4 only. We can do that by running the following:

$ curl 'localhost:8983/solr/admin/collections?action=CREATE&name=manualplace&numShards=2&replicationFactor=1&createNodeSet=172.19.0.3:8983_solr,172.19.0.4:8983_solr'
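The createNodeSet value is just a comma-separated list of node names. Judging by the examples in this post, a node name has the form host:port_solr, so a list of hosts can be turned into the parameter value with a couple of lines. Both helper names are hypothetical, and the name format is an assumption based on those examples:

```python
def node_name(host: str, port: int = 8983, context: str = "solr") -> str:
    """Format a node name the way it appears in this post, e.g. 172.19.0.3:8983_solr."""
    return f"{host}:{port}_{context}"


def create_node_set(hosts, port: int = 8983) -> str:
    """Join node names into a value suitable for the createNodeSet parameter."""
    return ",".join(node_name(host, port) for host in hosts)


print(create_node_set(["172.19.0.3", "172.19.0.4"]))
```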

The resulting collection would have its shards placed only on those two nodes.

We can do more than this. We can define rules that will be used by Solr to place shards in our SolrCloud cluster. There are three possible conditions for rules, which should be met for the replica to be assigned to a given node. Those conditions are:

- shard – the name of the shard or a wildcard. Tells Solr to which shards the rule should be applied; if not provided, the rule is used for all shards.

- replica – a * wildcard or a number from 0 to infinity

- tag – an attribute of a node, which must match for the rule to be satisfied

There are also rule operators, which include:

- equal – for example tag:xyz, which means that the tag property must be equal to xyz

- > – greater than, which means that the value of the property must be higher than the provided value

- < – less than, which means that the value of the property must be lower than the provided value

- ! – not equal, which means that the value of the property must be different from the provided value

We will see how to use these rules and operators shortly.
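Before we get there, the semantics of the four operators can be sketched in a few lines of Python. This is only an illustration of how a single condition value is read, not how Solr implements rule evaluation, and the function name is invented:

```python
def matches(condition: str, actual) -> bool:
    """Check a rule value such as '>30', '<5', '!overseer' or 'xyz' against a node attribute."""
    if not condition:
        raise ValueError("empty condition")
    operator, operand = condition[0], condition[1:]
    if operator == ">":
        return float(actual) > float(operand)   # greater than
    if operator == "<":
        return float(actual) < float(operand)   # less than
    if operator == "!":
        return str(actual) != operand           # not equal
    return str(actual) == condition             # no operator prefix means equal


print(matches(">30", 45))                # a node with 45 GB free against freedisk:>30
print(matches("!overseer", "overseer"))  # role:!overseer on the overseer node
```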

To fully use the above rules and operators we need to learn about snitches. Snitches are values coming from plugins that implement the Snitch interface, and there are a few of those provided by Solr out of the box:

- cores – number of cores in a node

- freedisk – disk space available on a node (in GB)

- host – name of the host on which the node works

- port – port of the node

- node – node name

- role – role of the node; at the time of writing the only possible value here is overseer

- ip_1, ip_2, ip_3, ip_4 – part of IP address, for example in 172.19.0.2, the ip_1 is 2, ip_2 is 0, ip_3 is 19 and ip_4 is 172

- sysprop.PROPERTY_NAME – property name provided by -Dkey=value during node startup

We configure snitches when we create a collection.
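The ip_N numbering is easy to get backwards, because ip_1 is the last octet of the address and ip_4 is the first. A short sketch (the helper name is mine) that derives the four fragments from an IPv4 address:

```python
def ip_fragments(ip: str) -> dict:
    """Map an IPv4 address to the ip_1..ip_4 tags, counting octets from the right."""
    octets = ip.split(".")
    if len(octets) != 4:
        raise ValueError(f"not an IPv4 address: {ip!r}")
    # ip_1 is the LAST octet and ip_4 is the FIRST one.
    return {f"ip_{i}": int(octet) for i, octet in enumerate(reversed(octets), start=1)}


print(ip_fragments("172.19.0.2"))
```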

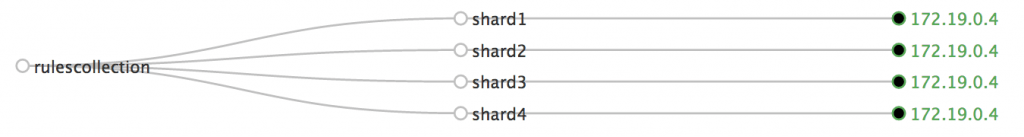

For example, to create a collection and only place shards on nodes that have more than 30GB of free disk space and an IP address that starts with 172 and ends with 4, we would use the following collection create command:

$ curl 'localhost:8983/solr/admin/collections?action=CREATE&name=rulescollection&numShards=4&replicationFactor=1&maxShardsPerNode=4&rule=shard:*,replica:*,ip_1:4&rule=shard:*,replica:*,ip_4:172&rule=shard:*,replica:*,freedisk:>30'
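Rule values contain characters such as *, comma, colon and >, which are easy to mangle in a shell. When scripting the call, one option is to percent-encode them and repeat the rule parameter once per rule. The sketch below uses a made-up helper name and only builds the URL:

```python
from urllib.parse import parse_qs, urlencode


def create_with_rules_url(name: str, num_shards: int, rules,
                          base: str = "http://localhost:8983/solr", **extra) -> str:
    """Build a CREATE URL that repeats the `rule` parameter once per rule."""
    pairs = [("action", "CREATE"), ("name", name), ("numShards", num_shards)]
    pairs += list(extra.items())
    pairs += [("rule", rule) for rule in rules]
    # A list of pairs lets urlencode emit the same key several times.
    return f"{base}/admin/collections?{urlencode(pairs)}"


rules = [
    "shard:*,replica:*,ip_1:4",
    "shard:*,replica:*,ip_4:172",
    "shard:*,replica:*,freedisk:>30",
]
url = create_with_rules_url("rulescollection", 4, rules,
                            replicationFactor=1, maxShardsPerNode=4)
print(url)
# Decoding the query string gives back the three original rules.
print(parse_qs(url.split("?", 1)[1])["rule"])
```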

The created collection would look as follows:

The rules are persisted in ZooKeeper and will be applied to every shard. That means that during replica placement and shard splitting Solr will use the stored rules. We can also modify the rules later by using the MODIFYCOLLECTION command of the Collections API.

New Replica Types in Solr 7

Till now we’ve discussed how shards and replicas work in Solr, at least till Solr 7. With Solr 7 things changed and new replica types have been introduced. The default behavior remained the same, but there are ways of creating new replica types – the new TLOG and PULL replicas (learn more about Solr 7 replica types in Solr 7 New Replica Types blog post). This changes what we can do with our SolrCloud cluster. Using appropriate replica types we can control how much near real-time searching and indexing, fault tolerance and high availability we trade for performance. If you don’t need near real-time searching and indexing you can use TLOG replicas and still have fault tolerance, high availability and higher performance, because they replicate binary index files and keep a transaction log. If you want replication similar to the master-slave architecture on top of SolrCloud, go for PULL replicas. As you can see, there are far more options available as soon as we switch to Solr 7.
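In Solr 7 the replica types are chosen at collection creation with the nrtReplicas, tlogReplicas and pullReplicas parameters. Here is a hedged sketch of building such a request; the helper name and the collection name are invented, the configuration name is left out for brevity, and the check that at least one NRT or TLOG replica exists reflects my understanding that PULL replicas cannot become leaders.

```python
from urllib.parse import urlencode


def create_with_replica_types(name: str, num_shards: int, nrt: int = 0, tlog: int = 0,
                              pull: int = 0, base: str = "http://localhost:8983/solr") -> str:
    """Build a Solr 7 CREATE URL with per-type replica counts (per shard)."""
    if nrt + tlog < 1:
        # Assumption: a shard needs an NRT or TLOG replica that can act as leader.
        raise ValueError("need at least one NRT or TLOG replica per shard")
    params = {"action": "CREATE", "name": name, "numShards": num_shards}
    for key, count in (("nrtReplicas", nrt), ("tlogReplicas", tlog), ("pullReplicas", pull)):
        if count > 0:  # only send the types that are actually requested
            params[key] = count
    return f"{base}/admin/collections?{urlencode(params)}"


print(create_with_replica_types("tlogpull", num_shards=2, tlog=2, pull=1))
```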

Autoscaling in Solr 7

The next thing that is awesome regarding the sharding and replication in Solr 7 is the autoscaling API. If I were to describe it in one sentence I would say that it allows us to define rules that control shard and replica placement based on certain criteria. We can tell Solr to automatically add replicas and control the placement of the replicas based on attributes like disk space utilization, CPU utilization, average system load, heap usage, number of cores deployed on Solr instance, custom properties or even a specific metric retrieved using Solr Metrics API. The nice thing about that API is that the rules can be applied to the whole SolrCloud cluster or each collection individually.

That’s not all. We can configure triggers and listeners. For example, a trigger can be configured to watch for events such as a node joining or leaving our SolrCloud cluster. When such an event occurs, the trigger will execute a set of actions. Listeners can be attached to triggers so that we can react to the triggers and the events themselves – for example for logging purposes.
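To give a feel for what a trigger definition looks like, the sketch below builds the JSON body for a set-trigger command that reacts to a node being added. Treat the details as assumptions to verify against the Solr Reference Guide for your version: the field names follow my reading of the Solr 7 autoscaling API, the trigger name is arbitrary, and the body would be sent with an HTTP POST to the autoscaling endpoint (/solr/admin/autoscaling in Solr 7.x, as far as I know).

```python
import json


def node_added_trigger(name: str = "node_added_trigger", wait_for: str = "5s") -> dict:
    """Build a set-trigger command that fires when a node joins the cluster."""
    return {
        "set-trigger": {
            "name": name,
            "event": "nodeAdded",   # event type to watch for
            "waitFor": wait_for,    # delay before the trigger fires
            "enabled": True,
        }
    }


payload = json.dumps(node_added_trigger(), indent=2)
print(payload)
```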

Summary

As you can see, we have lots of possibilities when it comes to shard creation – from simple control to sophisticated rules based on out-of-the-box values and the ability to provide our own. For me, rules are the missing functionality in Solr that I was looking for when SolrCloud was still young. I hope it will be known to more and more users.
